I know I've already blogged about this topic several times, but there are a lot of angles to come at this from and even more reminders that need to be issued. As a DPOS network created by literal Steemit Incorporated employees... knowing more about Koinos can help us understand our own network a little bit better as well. Why did they leave Hive to create a new thing? Perhaps they know something that we don't, or even more likely signed a non-compete clause that legally prevents them from doing so. To be fair all devs know the joy of abandoning a spaghetti factory project to start out fresh and try to "get it right this time". Untangling spaghetti is a god damn nightmare. Shoutout to our own devs who are putting in the work.

OMFG it works! How does it work? Who cares! It works!

No such thing as free transactions

I'm getting very tired of DPOS devs thinking they've solved the issue of fees with a 2.0 upgrade that is superior in every way to the old system. They absolutely have not and it's really fucking annoying that DPOS devs either don't understand the pros and cons of creating a bandwidth derivative, or they simply don't give a shit and want to shill their own network. Both of these forked scenarios are unacceptable and seem to be the paths we continue walking down to our own detriment.

Yesterday I was on Twitter being a little contrarian bitch as always (my glorious 'new' brand) and I was talking trash about this very issue. Ruffled a few feathers of some people who I don't even know, but seem to be hardcore Koinos fans. That's cool: I hope the network succeeds. That's good for everyone. It's not a competition.

And the reason it's not a competition is because it's impossible for a single decentralized network to scale up and capture the entire shebang. That's the entire point of decentralization; it's wildly inefficient. This is something that maximalists who only shill their own network fail to realize: their network can't do everything. In fact their network is just a tiny piece of a gigantic puzzle. Greed gets in the way of this knowledge, and many refuse to understand it, often deliberately.

It is simply not possible, on a technical level, to create something like a decentralized Facebook. Facebook already had a hard enough time scaling up as it is... now you want to build a redundant system where at least 100 nodes are all storing the same information across the board? Yeah: not possible. Maybe in a decade or two. Maybe. Until then, dream on.

If we look at a network like Hive or Koinos that promises it can scale to the moon: That's just game theory. The actual bandwidth on DPOS chains at this moment isn't more than Bitcoin. Imagine that. It's simple math:

- Bitcoin: 1-2 megabytes per block... let's say 1.5 on average.
  That's 79 gigabytes per year.

- Hive: 65 kilobyte max blocksize.
  651 gigabytes maximum.

Both https://hiveblocks.com/ & https://www.hiveblockexplorer.com/ do not show the size of the actual blocks we produce.

Because we're fucking noobs.

Assuming we only fill up 10% of our blocks on average we are processing the exact same amount of data as Bitcoin.
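For anyone who wants to check the napkin math, here is a minimal sketch. The block intervals are my assumptions (roughly 600 seconds for Bitcoin, 3 seconds for Hive), the function name is made up for illustration, and everything is in decimal units.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def yearly_gigabytes(block_bytes, block_interval_s, fill=1.0):
    """Data written per year, in decimal gigabytes, for a block size and interval."""
    blocks_per_year = SECONDS_PER_YEAR / block_interval_s
    return block_bytes * fill * blocks_per_year / 1e9


# Assumed intervals: ~600 s per Bitcoin block, 3 s per Hive block.
bitcoin_gb = yearly_gigabytes(1_500_000, 600)
hive_max_gb = yearly_gigabytes(65_000, 3)
hive_10pct_gb = yearly_gigabytes(65_000, 3, fill=0.10)

print(f"Bitcoin at 1.5 MB average: {bitcoin_gb:.0f} GB/year")
print(f"Hive at full 65 KB blocks: {hive_max_gb:.0f} GB/year")
print(f"Hive at 10% fill:          {hive_10pct_gb:.0f} GB/year")
```

Depending on whether kilobytes and gigabytes are counted in powers of 1000 or 1024, the Hive ceiling lands somewhere around 650 to 700 per year, so the 651 figure is in range. At 10% fill Hive comes out in the same ballpark as Bitcoin, which is the whole point.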

And this is the point in the conversation where a lot of people (including top 20 witnesses) would counter with the idea that we can increase our blocksize. And to that I say: no, we cannot. If anyone is even thinking about increasing the blocksize without first stress-testing the current blocksize they are not to be taken seriously.

Think about how many times critical infrastructure on Hive has just failed and websites were down because this or that thing wasn't working. More often than not, when one frontend to Hive is down (like peakd.com or leofinance.io) they are ALL down. That's inherently centralized bottlenecks getting in the way of robust 99.99999% uptime, which is clearly the uptime that Hive should have (if not even better). We have received zero indications from actual real-world implementation that Hive is ready to scale up any higher than it already is. This should be obvious to anyone that actually uses the product, and yet, it is not obvious somehow. Why is that? Perhaps it's because we are told over and over again how much this thing can scale (in theory). Drinking the koolaid.

You know what network actually does have the ability to increase their blocksize? Bitcoin. The BTC network could easily do it, but they are extremely conservative so it's going to be a while before that actually happens. This is a good thing considering BTC is the backbone of the entire industry. No need to build a bigger anchor when the current one does its job just fine; that would just weigh down the ship.

And on top of all this: Bitcoin ONLY DOES P2P TRANSFERS (in practice). Compare that to something like Hive where I'm going to click "publish" and post this entire monstrosity of a blog post directly to the chain. Think on that for a moment. Bitcoin doesn't have that kind of weight on it. It's far sleeker than that and serves a very important niche.

Then why are BTC fees so expensive?

Because the thing that Bitcoin does (security)... well, they do it exponentially better than anyone else, and the world is willing to pay a premium for that service. Have you seen the hashrate chart? Holy hell, Bitcoin ain't never getting rolled back for nothing. No double spends here. No changes to the ecosystem. No bullshit. It does what it was built to do, while many other networks are still trying to find their niche.

But this post isn't even about Bitcoin... is it?

Nah, it's about DPOS and the bandwidth derivatives we are shilling as the ultimate upgrade to on-chain network fees (which they absolutely are not). Everyone seems to be looking at where the ball is today rather than where it's gonna be in ten years, so allow me to elaborate (again).

Here's what's going to happen:

- DPOS chains like Hive and Koinos will gain adoption.

- This adoption happens faster than the network can scale (always).

- The governance token that creates the bandwidth will become too expensive for the average person to get the bandwidth that they need.

- A secondary market will emerge where the bandwidth resource will be sold separate from the governance token.

- We run into the exact same problem of on-chain fees, except different. In this scenario we create a rent-seeking scenario just like bid bots on Steem. Those with stake sell their bandwidth to those that don't. Arguably this is even worse than on-chain fees.

No one thinks this far...

Why? Because if it actually happens all of us will be millionaires, and the people at the top will be multi-billionaires, so nobody actually cares what happens to the plebs or the future of scaling for the network. Fuck it! We ball! Amirite?

Shit I was more right than I thought!

What does Koinos think about all this?

I decided to do some actual research yesterday instead of just full on know-it-all talking out my ass. Turns out I didn't actually need to do the research as my know-it-all strategy was spot on, but it's nice to have some evidence to back it up.

Two whitepapers! WUT?

Did you know that Koinos actually has a separate whitepaper just for bandwidth allocations (MANA)? Smart, it's a really important topic!

https://koinos.io/unified-whitepaper/

https://koinos.io/mana-whitepaper/

Very similar to resource credits

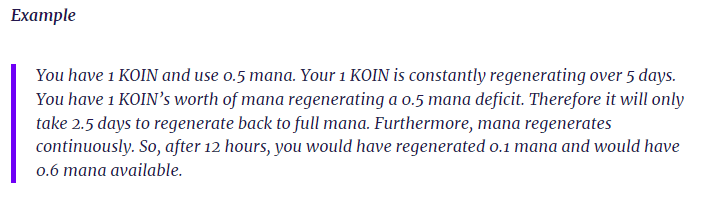

You stake Koin to create mana and post transactions to the chain. Basically that's how Hive does it as well: a timelock is employed to create a derivative bandwidth asset used to post to chain. It even has a 5-day regenerating period just like Hive. Makes sense.
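To make the regenerating-bar idea concrete, here is a minimal sketch. It assumes regeneration is linear and that an empty bar refills completely in 5 days; the function and parameter names are hypothetical and not taken from the Koinos or Hive codebases.

```python
REGEN_SECONDS = 5 * 24 * 60 * 60  # 5-day full regeneration window, as described in the post


def current_mana(max_mana, mana_at_last_use, seconds_since_last_use):
    """Linear regeneration (an assumption): an empty bar refills fully in REGEN_SECONDS."""
    regenerated = max_mana * seconds_since_last_use / REGEN_SECONDS
    # Mana never exceeds the cap set by the staked balance.
    return min(max_mana, mana_at_last_use + regenerated)


# One day after draining a 100-unit bar to zero, a fifth of it is back.
print(current_mana(100, 0, 24 * 60 * 60))
```

The takeaway is that stake sets the ceiling and time refills it, so whoever holds the stake holds a renewable stream of bandwidth.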

What we have to realize is that given any amount of adoption that stress-tests the network, suddenly this bandwidth acquires a non-zero value. But don't worry about it: the Koinos whitepaper has you covered BB!

Ah, you see?

The whitepaper pinky promises that their bandwidth derivative will never have a non-zero value! Thank goodness, I was getting worried! Eh, well, what about delegations?

We can see that Koinos has made some pretty impressive upgrades to the bandwidth derivative yield farming system. The way mana works is arguably superior and more streamlined than Resource Credits on Hive. Again, the reason for this is because they got to build their system from the ground up while we have to continue to build atop the spaghetti factory. However, what we have will still work in exactly the same way, we just might have to jump through a few hoops to get there. The end result will be identical, even if the way they do it is more streamlined.

Being able to charge the receiver the bandwidth cost is really cool because if I launch an app and want to offer completely free service all I have to do is acquire enough stake to subsidize all my users. All they have to do is charge me like a collect-call on a payphone (but what is a payphone, you may be asking). Kudos to Koinos for these new developments; we can learn something from them after all.

The problem?

Well, it's downright childish to make the claim that:

a) You're going to get adoption.

b) The bandwidth will never have value.

They can't stop a secondary market from popping up where users pay for mana directly, and it's foolish to claim otherwise. This is especially true considering how easy it's going to be to delegate MANA and create that secondary market; it's already baked directly into the cake. We have to ask ourselves: if they are wrong about this, what else are they wrong about? Whitepapers are largely meaningless in this regard. It never works out the way the founders expect it to.

So what is the advantage of on-chain fees?

Like I said, creating a 'free' derivative asset is not a 2.0 upgrade to the bandwidth problem (although it is a pretty cool implementation IMO), and expecting that everyone will eventually transition to this solution is incorrect. There are pitfalls.

Stagnation

When we create a derivative asset that represents bandwidth to be yield-farmed, the stakeholders unilaterally control the network. This is not the case on Bitcoin (stakeholders have very little say on BTC, which is good and by design to prevent a hostile takeover).

Say someone builds an app on DPOS that is sub-par and isn't nearly as good as other apps on the network. This doesn't matter if the founders of that app have enough stake to force the network to give them bandwidth to operate. The sub-par app will continue to dominate the network because that's where the stake is being allocated. Other apps that are clearly better will be choked out because they didn't get in early enough.

Comparing this to a network with on-chain fees like Ethereum, we see that this is no longer an issue. Every app must constantly be fighting for its life in the jungle. There are no subsidies; there are no guarantees. If today your app is good and worth paying the on-chain fee: great. If it completely breaks down at the seams during a bull market spike: you better figure your shit out and streamline it so it doesn't use so much bandwidth.

Conclusion

The bottom line here is that networks with on-chain fees are going to prove themselves to be far more competitive over time without having the option of getting soft and lazy like DPOS apps on a chain that guarantees them bandwidth for 'free'... forever. Users on DPOS chains cannot seem to envision an environment where the derivative asset that represents bandwidth would ever have value, which is comical because any level of significant adoption guarantees that the bandwidth will have value. It's going to catch many by surprise when it happens. Not I. I will be ready for the inevitable rent-seeking bandwidth market.

Yield-farmed derivative bandwidth tokens are largely underappreciated by those outside the DPOS system, while overappreciated by those within it. We love to advertise our chain as one that has zero transaction fees, but that simply is not the case. Any network that actually offered free service would immediately be Sybil attacked and destroyed due to the systemic failure of providing free service on an open network. There is no getting around this fact: WEB3 isn't WEB2. Get over it.

Far too many people out there think that WEB3 needs to offer free service to compete with WEB2. This again is incorrect. WEB3 is defined by paying for service. The difference between WEB3 and WEB1 (subscription model) is that WEB3 actually pays users to engage with the network in productive ways using proper monetary incentives. Even though it costs money to use the system, one can easily make a lot more money just by trying to be a productive member of crypto society. In this sense, the user becomes an employee of the network, and that's a great thing. We just need to understand the bumps in the road we are about to drive over before they knock us out of the cart.

So much negativity :o)

I wish I had access to hiveblocks.com, because it has many annoying shortcomings that should be easy to correct, which would make it a much more useful tool. It would certainly be easier than writing an entirely new block explorer.

Obviously we do know the sizes of blocks, and if you run your own node, you have that in "Block stats" in the log (I've discussed the need to be able to extract similar info from the block log, but that understandably has very low priority).

It is more like 50%. We also at times have blocks that are full, mostly during Splinterlands related events, but the increased traffic is not sustained. For the sake of some estimations below let's assume it is 1/3.

Yes we can (to an extent). Obviously we'd need to do it gradually and observe the effects. I wish witnesses had doubled the block size already, so we could see how it behaves on actual traffic in the rare events when blocks are now full (there would be the same amount of data, just packed into fewer, bigger blocks followed by regular small ones - there is no actual demand for bigger blocks all the time). Consensus code was stress tested and we know we can handle even 2MB blocks. The special "executionally dense" transactions that were a threat before HF26, only mitigated by small blocks, were optimized away, so they are no longer a problem. It is actually hard to set up an environment for a stress test that can saturate 2MB blocks, but you can make estimations yourself based on the information the node puts in the log.

On my mid-range desktop computer during sync (where every check is made, there is just no waiting between blocks) the blocks are processed in 1.2-1.7 ms per block. Let's assume 2ms. With the above assumption that blocks are only 1/3 full, syncing a full block would take on average 6ms. When a witness node produces a block, it has to process every transaction separately. With most of the other code optimized, dealing with undo sessions can now take even 80% of the processing time (something that is on the radar). So we multiply by 5. Therefore production of an average full 64kB block takes 30ms. To make it a full 2MB block, we need to multiply again by 32. We are still under one second. I'm confident we can still cut it in half, but there is no need for that at the moment (we could e.g. process signatures on GPU for extra parallelism).
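The estimate chain above is easy to lose track of, so here it is as a few lines of arithmetic. This is only a sketch of the reasoning in this comment; the function name and parameter names are made up for illustration and the inputs are the rounded figures quoted above.

```python
def estimated_production_ms(sync_ms=2, fill_factor=3, undo_factor=5, size_factor=32):
    """Walk through the comment's estimate for producing a full 2MB block."""
    full_sync_ms = sync_ms * fill_factor          # blocks are ~1/3 full, so a full one costs 3x
    full_produce_ms = full_sync_ms * undo_factor  # undo sessions take ~80% of the time, so 5x
    return full_produce_ms * size_factor          # 64kB -> 2MB is another 32x


total_ms = estimated_production_ms()
print(f"Estimated time to produce a full 2MB block: {total_ms} ms")
```

With those inputs the total stays under the one-second mark, well inside a 3-second block interval, which is the claim being made.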

Similar with memory consumption. Out of 24GB recommended size of state, 8GB is still free, 4GB is fragmentation waste, finally more than 11GB does not really need to reside in memory all the time and is therefore a target of relatively easy optimizations (by that I mean we know what needs to be done and that it can be done, not that it is a short task :o) ). Once those optimizations are in place we could grow to 50 million users and still be able to run consensus node on regular computer.

I agree with one aspect. It is near impossible to stress test the full stack of important second layer services, because you'd need a whole bunch of spare servers. And I'm sure they would not be able to handle the load of full 2MB blocks - we'd need much better ones. They are not in the realm of "not yet available on the market" though, they just cost a lot. While the problem of the block log can be somewhat mitigated by pruning and sharing the same storage between nodes, there is no helping it in the case of stuff put into databases. We are talking about even 150 million transactions per day and 56GB of new blockchain data (20TB per year). That is not sustainable, at least not in the context of a decentralized network (I think it is important for semi-normal people to be able to run Hive related services even if they don't receive producer rewards). And I think it is not sustainable in a fundamental way, to the point where we should split transactions between those that have to be kept forever and others (the majority) that are only kept for a limited time (1-3 months).
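The daily and yearly figures above follow from the block size and block interval. A quick sketch, assuming 3-second blocks, 2MB meaning 2 × 1024 × 1024 bytes, and binary units throughout (all variable names here are just for illustration):

```python
BLOCK_INTERVAL_S = 3                    # assumed Hive block interval
BLOCK_BYTES = 2 * 1024 * 1024           # a full 2MB block, binary units assumed
TX_PER_DAY = 150_000_000                # figure quoted in the comment

blocks_per_day = 24 * 60 * 60 // BLOCK_INTERVAL_S
gib_per_day = BLOCK_BYTES * blocks_per_day / 1024**3
tib_per_year = gib_per_day * 365 / 1024
avg_tx_bytes = BLOCK_BYTES * blocks_per_day / TX_PER_DAY

print(f"{blocks_per_day} blocks/day, {gib_per_day:.2f} GB/day, {tib_per_year:.1f} TB/year")
print(f"Implied average transaction size: {avg_tx_bytes:.0f} bytes")
```

Under these assumptions, 150 million transactions per day works out to an average transaction of roughly 400 bytes.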

I'd need to invite @gtg to talk about what can cause some servers to fail at times, however what happens later is partially related to decentralization. There is no central balancer to distribute traffic in reasonable way. When popular API node fails, its traffic is often redirected to other popular API node, which has to end in trouble. No one is going to keep servers so oversized that they are mostly idle all the time only to be able to handle occasional heavy load.

When adoption increases, so does the price of Hive and therefore producer rewards. They will have both the need (increased traffic) and the means (increased rewards) to scale up the hardware. I also hope increased RC costs due to traffic would put some sanity into the minds of app devs. The same data could be stored way more efficiently in binary form instead of bloated custom_jsons. I've also recently learned about a node-health service that just sends "meaningless" transactions to those nodes in order to see if they pass to the blockchain. I can understand the reasons why someone would do this, but it is only possible because RC is dirt cheap (one of the reasons I was kind of opposed to RC delegations and why I have not yet started experiments with an "RC pool" solution similar to the "contract pays" you've mentioned, even though it would be very useful). So, we have usage bloat that can be reduced when incentives arrive, we can scale up through better hardware in the short term and some more optimizations in the longer timeframe. Once better hardware becomes the norm we can increase the size of RC pools to reflect that, pushing the RC price back to affordable levels.

Websites going down isn't a failure of critical Hive infrastructure; usually it's related to API nodes having trouble

which isn't the best way to do it, because it should be solved on the client side (i.e. by changing the API node that the client is using)

Well, from user perspective it might actually look like it is, since they are cut out of interaction with the blockchain when that happens.
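The client-side fix mentioned above (switching to another API node when one fails) could look something like this minimal sketch. The node URLs and the `request` callable are hypothetical placeholders, not a real Hive client library.

```python
from typing import Callable, Sequence


def call_with_failover(nodes: Sequence[str], request: Callable[[str], dict]) -> dict:
    """Try each API node in order and return the first successful response."""
    last_error = None
    for node in nodes:
        try:
            return request(node)
        except Exception as exc:  # a real client would catch narrower error types
            last_error = exc
    raise RuntimeError("all API nodes failed") from last_error


def fake_request(node: str) -> dict:
    """Stand-in for a real API call: the first node is pretending to be down."""
    if node == "https://node-a.example":
        raise ConnectionError("node is down")
    return {"served_by": node}


result = call_with_failover(
    ["https://node-a.example", "https://node-b.example"], fake_request
)
print(result)
```

Note that this only moves the problem if every client fails over to the same popular backup node, which is exactly the pile-on described in the comment above.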

My reply got rather lengthy so I just wrote a whole blog post here instead: https://peakd.com/koinos/@justinw/koinos-and-hive-what-s-the-difference-and-why

tl;dr: while I agree with some of this, much of it is based on incorrect assumptions (and Koinos is not DPOS). I appreciate constructive feedback on anything though so kudos to you for thinking and writing.

Luckily the consensus algo of Koinos has nothing to do with this post.

Thanks for the lengthy reply I guess I'll read that now.

Comment posts are always hilarious, eh?

Indeed. True Hive style LOL

How can you do all this research and get the first thing wrong about KOINOS: its consensus is proof of burn and not DPoS...

For the rest, I'll have to reread it

Because I didn't do that much research and the consensus algorithm for Koinos is fully beyond the scope of what I'm talking about here. To be fair I did know that Koinos CLAIMS to be "proof-of-burn". You can see how this is an easy mistake to make:

First of all, what do you need to burn tokens? Correct, you need STAKE to burn tokens. It is not a stretch to be like actually this is just a slightly different flavor of proof-of-stake or DPOS. As we can see they are already quite liberal with the claims they make in the whitepaper.

I'm searching the whitepaper more for information on proof-of-burn, and you know what I find? Well, actually, it's what I'm not finding. Why would anyone burn stake to mint a block? What's the financial incentive to do that? Can't find it... do you know what it is? I'd love to know before I can continue this assessment further.

So there is a block reward

This counts as financial incentive... but again I see very little in terms of how to stop bad actors or Sybil attack. Why wouldn't block producers be burning the exact amount of Koin they get in return from the block reward? How is this any different from letting just anyone mint a block?

You see even after reading the whitepaper and focusing on consensus it still makes very little sense. The key components are missing:

If anyone knows the answers let me know.

Until then I have to assume that I'm missing something.

The financial incentive comes from the 2% inflation. If 50% of Koin is burned this equates to a 4% APR. I expect it to be more like 5-6%, since 50% will not always be burned.
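The APR figure above is just inflation divided by the share of supply being burned. A minimal sketch, assuming the newly minted Koin is split pro rata among burners and ignoring everything else (the function name is hypothetical):

```python
def burner_apr(inflation_rate, burned_fraction):
    """Simplified yield for burners: new supply spread over the burned share only."""
    if not 0 < burned_fraction <= 1:
        raise ValueError("burned_fraction must be in (0, 1]")
    return inflation_rate / burned_fraction


for fraction in (0.5, 0.4, 1 / 3):
    print(f"{fraction:.0%} burned -> {burner_apr(0.02, fraction):.1%} APR")
```

Under that assumption, a 5-6% APR corresponds to somewhere between a third and 40% of the supply being burned.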

I suppose the idea is to have as many burn pools and individual nodes which would mitigate several bad actors with large "stake" (i.e. Koin which they can burn)

Wrote a response about Sybil attacks on my post: https://peakd.com/koinos/@justinw/rpb9qm

tl;dr it's very similar to Bitcoin

Proof of Burn is a mix between PoW and PoS, but more inclined to PoS. I tend to think that PoB is like PoS where you are continuously unstaking.

It is like Proof of Stake because:

It is like Proof of Work because:

Don’t throw me in that briar patch!

In a way, aren’t the now-clunky onboarding systems a safety valve? Since, right now, it would be impossible for five hundred million new accounts to be created in a short period of time. So even if Hive suddenly became really well known and a bazillion people wanted to FOMO in, wouldn’t Hive’s devs be able to plan at least a bit ahead for stress testing before a Resource Credit crunch hit the fan?

If we get even a million daily users it's going to be an issue.

Pretty sure.

And any frontend can just onboard with an email and password if they feel like it, storing the keys themselves and giving themselves permissions. Lite accounts already do this by design.

It also depends on the app.

If users are posting app commands directly on chain it could get crazy very fast.

Just like how Splinterlands gave us the crunch however many months ago that was.

Well that would be 100x or so from where we are now. 😎

But, yeah, accounts and active accounts are very different things.

I took your advice a while back and started collecting tokens. I'm nowhere in your league but I did just cross 200. I agree, nothing is ever "free". There is a cost to be paid somewhere. While I don't think we're anywhere near the point it will become an issue, you just never know. If Leothreads goes viral...? All bets could be off in a hurry.


Koin could be what Hive wants to be (I'm not talking about all the social-related things).

A backend for transactions and no politics. The system of Hive is revolutionary in terms of transaction handling. But it's stuck.

And politics on Social layer make everything worse :D

I like the idea of the neutral carrier of information. I think Koin has a chance to build something like this.

Sure but they still have to actually build it and the guys over there act like it's already built. Classic crypto peeps counting their chickens before they hatch. Eggs so expensive, bro!

That's true :)
