<p dir="auto">Table of contents:

<ul>

<li>HAF node for production

<ul>

<li>ZFS notes

<li>Requirements

<li>Docker installation

<li>Build and replay

</ul>

<li>HAF node for development

<ul>

<li>Requirements

<li>Build and replay

</ul>

</ul>

<hr />

<h3>HAF for production

<p dir="auto">It is highly recommended to set up ZFS with LZ4 compression. It has no noticeable effect on performance, and it reduces the storage needs by about 50%.

<p dir="auto">ZFS might seem complicated, but it is very easy to set up. There are plenty of guides out there on how to do so, so I'll just provide some notes.

<h3>ZFS notes

<p dir="auto">When setting up on Hetzner servers, for example, I enable RAID 0, allocate 50-100GB to <code>/</code>, and leave the rest unallocated during the server setup. After the first boot, I use fdisk to create a partition in the remaining space on each disk, and I use those partitions for ZFS.

<p dir="auto">I also use the settings listed under "ZFS config" <a href="https://bun.uptrace.dev/postgres/tuning-zfs-aws-ebs.html#zfs-config" target="_blank" rel="noreferrer noopener" title="This link will take you away from hive.blog" class="external_link">here</a>.

<pre><code>zpool create -o autoexpand=on pg /dev/nvme0n1p4 /dev/nvme1n1p4

zfs set recordsize=128k pg

# enable lz4 compression

zfs set compression=lz4 pg

# disable access time updates

zfs set atime=off pg

# enable improved extended attributes

zfs set xattr=sa pg

# reduce amount of metadata (may improve random writes)

zfs set redundant_metadata=most pg
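<p dir="auto">To confirm that the settings above were applied, and to see how much space LZ4 is actually saving, the pool properties can be read back. This is a minimal sketch, assuming the pool is named <code>pg</code> as in this guide; the function name <code>show_zfs_settings</code> is made up for illustration.

```shell
# Print the properties set above, plus the achieved compression ratio.
# Hypothetical helper; the pool name defaults to "pg" as used in this guide.
show_zfs_settings() {
  pool=${1:-pg}
  if ! command -v zfs >/dev/null 2>&1; then
    echo "zfs command not found" >&2
    return 1
  fi
  # -H: no header, tab-separated output; -o: show only property name and value
  zfs get -H -o property,value \
    recordsize,compression,atime,xattr,redundant_metadata,compressratio "$pool"
}
```

<p dir="auto">Running <code>show_zfs_settings pg</code> once the node has written some data should show a <code>compressratio</code> that reflects the savings on your own dataset.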

<p dir="auto">You want to change the ARC size depending on your RAM. By default it is 50% of your RAM, which is fine for a machine with more than 64 GB of RAM, but with less RAM you must lower the ARC size.

<p dir="auto">25 GB of RAM is used for shared_memory, and you will need 8-16 GB of free RAM for hived, PostgreSQL, and the OS in general, depending on your use case. The rest can go to the ZFS ARC.

<p dir="auto">To see the current ARC size, run <code>cat /proc/spl/kstat/zfs/arcstats | grep c_max</code>

<p dir="auto">1 GB is 1073741824 bytes.<br />

So to set it to 50 GB, you calculate 50 * 1073741824 = 53687091200.

<pre><code># set ARC size to 50 GB

echo 53687091200 >> /sys/module/zfs/parameters/zfs_arc_max
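<p dir="auto">The arithmetic above can be wrapped in a few small helpers. This is only a sketch: the function names are invented for illustration, the 25 GB figure is the shared_memory size mentioned above, and the default reserve of 16 GB is the upper end of the 8-16 GB range, so adjust it to your own use case.

```shell
# Convert GiB to bytes (1 GiB = 1073741824 bytes).
gib_to_bytes() {
  echo $(( $1 * 1073741824 ))
}

# RAM left for the ARC, in GiB: total RAM minus 25 GB for shared_memory
# minus a reserve for hived + PostgreSQL + OS (assumed 16 GB by default).
arc_budget_gib() {
  total_gib=$1
  reserve_gib=${2:-16}
  left=$(( total_gib - 25 - reserve_gib ))
  if [ "$left" -lt 0 ]; then
    left=0
  fi
  echo "$left"
}

# Print a kernel module options line for the given ARC size in GiB.
arc_modprobe_line() {
  echo "options zfs zfs_arc_max=$(gib_to_bytes "$1")"
}
```

<p dir="auto">For example, <code>arc_budget_gib 128</code> prints 87 and <code>gib_to_bytes 50</code> prints 53687091200. Note that a value written to <code>/sys/module/zfs/parameters/zfs_arc_max</code> does not survive a reboot; one common way to keep it is to put the line printed by <code>arc_modprobe_line</code> into a file such as <code>/etc/modprobe.d/zfs.conf</code>.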

<hr />

<h3>Requirements (production)

<p dir="auto">Storage: 2.5TB (compressed LZ4) or +5TB (uncompressed) - Increasing over time<br />

RAM: You might make it work with +32GB - Recommended +64GB<br />

OS: Ubuntu 22

<p dir="auto">If you don't care about it reducing the lifespan of your NVMe/SSD or potentially taking +1 week on HDD to sync, you can put shared_memory on disk. I don't recommend this at all but if you insist, you can get away with less RAM.

<p dir="auto">It is also recommended to give spare RAM to the ZFS ARC rather than to the PostgreSQL cache.

<p dir="auto">NVMe is also worth it for storage. You can get away with a 2TB ZFS pool for HAF alone, but 2.5TB is the safer choice, at least for a while.

<hr />

<h3>Setting up Docker

<p dir="auto">Installing

<pre><code>curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

<p dir="auto">Add your user to the docker group (to run docker as a non-root user)

<pre><code>addgroup USERNAME docker

<p dir="auto">You must re-login after this.
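<p dir="auto">After logging back in, you can verify that the group change took effect. A minimal sketch; <code>user_in_group</code> is a hypothetical helper name, not part of Docker.

```shell
# Succeed if USER (first argument) is a member of GROUP (second argument).
user_in_group() {
  # id -nG prints the user's group names separated by spaces;
  # put one name per line so grep -x can match the whole name exactly
  id -nG "$1" | tr ' ' '\n' | grep -qx "$2"
}
```

<p dir="auto">Usage: <code>user_in_group "$USER" docker && echo "docker group is active"</code>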

<p dir="auto">Change the logging driver to prevent logs from filling up the storage. Edit<br />

<code>/etc/docker/daemon.json</code>

<pre><code>{

"log-driver": "local"

}

<p dir="auto">Restart docker

<pre><code>systemctl restart docker

<p dir="auto">You can check the logging driver with

<pre><code>docker info --format '{{.LoggingDriver}}'
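<p dir="auto">The <code>local</code> driver rotates logs on its own, but the limits can also be set explicitly through <code>log-opts</code>. The sketch below writes such a config to a path of your choice so it can be reviewed first. The <code>10m</code> and <code>3</code> values are example numbers, not recommendations from this guide, and <code>write_daemon_json</code> is a made-up helper name.

```shell
# Write a daemon.json that selects the "local" log driver with explicit limits.
# The target path is a parameter so the file can be reviewed before it is
# copied to /etc/docker/daemon.json. The 10m / 3 limits are example values.
write_daemon_json() {
  target=$1
  cat > "$target" <<'EOF'
{
  "log-driver": "local",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}
EOF
}
```

<p dir="auto">For example, <code>write_daemon_json /tmp/daemon.json</code>, then copy the file into place and restart docker. If you already have a <code>daemon.json</code> with other settings, merge the keys by hand instead of overwriting it. The new settings only apply to containers created after the restart.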

<hr />

<h3>Running HAF (production)

<p dir="auto">Installing the requirements

<pre><code>sudo apt update

sudo apt install git wget

<p dir="auto">/pg is my ZFS pool

<pre><code>cd /pg

git clone https://gitlab.syncad.com/hive/haf

cd haf

git checkout v1.27.4.0

git submodule update --init --recursive

<p dir="auto">We run the build and run commands from a different folder

<pre><code>mkdir -p /pg/workdir

cd /pg/workdir

<p dir="auto">Build

<pre><code>../haf/scripts/ci-helpers/build_instance.sh v1.27.4.0 ../haf/ registry.gitlab.syncad.com/hive/haf/

<p dir="auto">Make sure /dev/shm has at least 25GB allocated. You can allocate RAM space to /dev/shm by

<pre><code>sudo mount -o remount,size=25G /dev/shm
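<p dir="auto">To check the size before starting the node, something like the following can be used. It is a sketch with hypothetical helper names; it relies only on the POSIX output format of <code>df</code>.

```shell
# Print the total size of the filesystem mounted at PATH, in whole GiB.
fs_size_gib() {
  # df -P gives a stable POSIX layout; -k reports sizes in 1024-byte blocks
  df -Pk "$1" | awk 'NR == 2 { printf "%d\n", $2 / 1048576 }'
}

# Succeed if the filesystem at PATH is at least MIN_GIB large.
fs_at_least_gib() {
  [ "$(fs_size_gib "$1")" -ge "$2" ]
}
```

<p dir="auto">Usage: <code>fs_at_least_gib /dev/shm 25 || echo "/dev/shm is too small"</code>. Keep in mind that a remount done this way lasts only until the next reboot.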

<p dir="auto">Run the following command to generate the config.ini file:

<pre><code>../haf/scripts/run_hived_img.sh registry.gitlab.syncad.com/hive/haf/instance:instance-v1.27.4.0 --name=haf-instance --data-dir=$(pwd)/haf-datadir --dump-config

<p dir="auto">Then you can edit <code>/pg/workdir/haf-datadir/config.ini</code> and add/replace the following plugins as you see fit:

<pre><code>plugin = witness account_by_key account_by_key_api wallet_bridge_api

plugin = database_api condenser_api rc rc_api transaction_status transaction_status_api

plugin = block_api network_broadcast_api

plugin = market_history market_history_api

<p dir="auto">You can add most plugins later and restart the node. The only exceptions are <code>market_history</code> and <code>market_history_api</code>: if you add them later, you have to replay your node again.

<p dir="auto">Now you have 2 options: download an existing block_log and replay the node, or sync from p2p. Replay is usually faster and takes less than a day (maybe 20h). Follow one of the two options below.
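<p dir="auto">Since forgetting <code>market_history</code> costs a full replay, it may be worth checking the config before the first start. A minimal sketch, assuming plugins are listed space-separated on <code>plugin = ...</code> lines as shown above; <code>has_plugin</code> is a hypothetical helper.

```shell
# Succeed if config file FILE enables plugin NAME on any "plugin = ..." line.
# Commented-out lines (starting with #) are ignored.
has_plugin() {
  awk -v want="$2" '
    /^[ \t]*plugin[ \t]*=/ {
      sub(/^[^=]*=/, "")                  # drop everything up to the "="
      n = split($0, names, /[ \t]+/)      # plugin names are space-separated
      for (i = 1; i <= n; i++) if (names[i] == want) found = 1
    }
    END { exit(found ? 0 : 1) }
  ' "$1"
}
```

<p dir="auto">Usage: <code>has_plugin /pg/workdir/haf-datadir/config.ini market_history || echo "market_history is not enabled"</code>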

<p dir="auto"><strong>- Replaying<br /><span>

Download block_log provided by <a href="/@gtg">@gtg - You can run it inside tmux or screen

<pre><code>cd /pg/workdir

mkdir -p haf-datadir/blockchain

cd haf-datadir/blockchain

wget https://gtg.openhive.network/get/blockchain/block_log

<p dir="auto">You might need to change the permissions of new files/folders (execute before running haf)

<pre><code>sudo chmod -R 777 /pg/workdir

<p dir="auto">Run & replay

<pre><code>cd /pg/workdir

../haf/scripts/run_hived_img.sh registry.gitlab.syncad.com/hive/haf/instance:instance-v1.27.4.0 --name=haf-instance --data-dir=$(pwd)/haf-datadir --shared-file-dir=/dev/shm --replay --detach

<p dir="auto"><strong>Note: You can use the same replay command after stopping the node to continue the replay from where it left off.</strong>

<p dir="auto"><strong>- P2P sync<br />

Or you can just start haf and it will sync from p2p - I would assume it takes 1-3 days (never tested myself)

<pre><code>cd /pg/workdir

../haf/scripts/run_hived_img.sh registry.gitlab.syncad.com/hive/haf/instance:instance-v1.27.4.0 --name=haf-instance --data-dir=$(pwd)/haf-datadir --shared-file-dir=/dev/shm --detach

<p dir="auto">Check the logs:

<pre><code>docker logs haf-instance -f --tail 50

<p dir="auto"><strong>Note: You can use the same p2p start command after stopping the node to continue the sync from where it left off.</strong>

<hr />

<h3>HAF for development

<p dir="auto">This setup takes around 10-20 minutes and is very useful for development and testing. I usually have this on my local machine.

<p dir="auto">Requirements:<br />

Storage: 10GB<br />

RAM: +8GB (8GB might need swap/zram for building)<br />

OS: Ubuntu 22

<p dir="auto">The process is the same as for production up to and including the build, so I'm going to paste the commands here. Docker installation is the same as above.

<p dir="auto">I'm using /pg here but you can change it to whatever folder you have.

<pre><code>sudo apt update

sudo apt install git wget

cd /pg

git clone https://gitlab.syncad.com/hive/haf

cd haf

# develop branch is recommended for development

# git checkout develop

git checkout v1.27.4.0

git submodule update --init --recursive

mkdir -p /pg/workdir

cd /pg/workdir

../haf/scripts/ci-helpers/build_instance.sh v1.27.4.0 ../haf/ registry.gitlab.syncad.com/hive/haf/

<p dir="auto"><span>Now we get the 5 million block_log provided by <a href="/@gtg">@gtg

<pre><code>cd /pg/workdir

mkdir -p haf-datadir/blockchain

cd haf-datadir/blockchain

wget https://gtg.openhive.network/get/blockchain/block_log.5M

<p dir="auto">rename it

<pre><code>mv block_log.5M block_log

<p dir="auto">Replay will have an extra option

<pre><code>cd /pg/workdir

../haf/scripts/run_hived_img.sh registry.gitlab.syncad.com/hive/haf/instance:instance-v1.27.4.0 --name=haf-instance --data-dir=$(pwd)/haf-datadir --shared-file-dir=/dev/shm --stop-replay-at-block=5000000 --replay --detach

<p dir="auto">Check the logs:

<pre><code>docker logs haf-instance -f --tail 50

<p dir="auto">HAF node will stop replaying at block 5 million. Use the same replay command ☝️ for starting the node if you stop it.

<hr />

<h3>General notes:

<ul>

<li>You don't need to add any plugins to hived. Docker files will take care of them.

<li>To find the local IP address of the docker container you can run <code>docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' haf-instance</code>, which is usually <code>172.17.0.2</code> for the first container.

<li>To see what ports are exported run <code>docker ps

<li>To stop the node you can run <code>docker stop haf-instance

<li>For replaying from scratch you have to remove shared_memory from <code>/dev/shm</code> and also remove the <code>/pg/workdir/haf-datadir/haf_db_store</code> directory

<li>You can override PostgreSQL options by adding them in <code>/pg/workdir/haf-datadir/haf_postgresql_conf.d/custom_postgres.conf
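<p dir="auto">The "replay from scratch" cleanup from the notes above could be scripted roughly like this. It is a sketch under assumptions: the function name is made up, and the shared memory file is assumed to be named <code>shared_memory.bin</code>, so check what is actually in your <code>/dev/shm</code> first.

```shell
# Remove replay state so the next --replay starts from scratch.
# SHM_DIR is where the shared memory file lives, DATA_DIR is the HAF data dir.
# Assumption: the shared memory file is named shared_memory.bin.
reset_replay_state() {
  shm_dir=$1
  data_dir=$2
  if [ -z "$shm_dir" ] || [ -z "$data_dir" ]; then
    echo "usage: reset_replay_state SHM_DIR DATA_DIR" >&2
    return 1
  fi
  rm -f "$shm_dir/shared_memory.bin"
  rm -rf "$data_dir/haf_db_store"
}
```

<p dir="auto">Stop the node first with <code>docker stop haf-instance</code>, then run <code>reset_replay_state /dev/shm /pg/workdir/haf-datadir</code>. The files may be owned by users from inside the container, in which case the removal needs root. The block_log under <code>haf-datadir/blockchain</code> is left untouched.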

<hr />

<p dir="auto">The official GitLab repository includes more information. See /doc.<br /><span>

<a href="https://gitlab.syncad.com/hive/haf" target="_blank" rel="noreferrer noopener" title="This link will take you away from hive.blog" class="external_link">https://gitlab.syncad.com/hive/haf

<hr />

<p dir="auto"><del>I'm preparing another guide for hivemind, account history and jussi. Hopefully will be published by the time your HAF node is ready.

<p dir="auto">Update: <a href="/hive-139531/@mahdiyari/running-hivemind-and-hafah-on-haf--jussi-2023">Running Hivemind & HAfAH on HAF + Jussi

<p dir="auto">Feel free to ask anything.

<hr />

<p dir="auto"><center><img src="https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/mahdiyari/23uFRp7fcDNZqL5g3Rn5i5wJXRHCqJthDWV6sEe2e5j9T2k8yKy4vUK3tw7WthfYJDvcs.jpg" alt="cat-pixabay" srcset="https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/mahdiyari/23uFRp7fcDNZqL5g3Rn5i5wJXRHCqJthDWV6sEe2e5j9T2k8yKy4vUK3tw7WthfYJDvcs.jpg 1x, https://images.hive.blog/1536x0/https://files.peakd.com/file/peakd-hive/mahdiyari/23uFRp7fcDNZqL5g3Rn5i5wJXRHCqJthDWV6sEe2e5j9T2k8yKy4vUK3tw7WthfYJDvcs.jpg 2x" />

On a Ryzen 7950X machine with 128 GB of RAM, I did it in just about 24 hours (either slightly under or over, I don't recall which). This was about 1.5 months ago, so it should be basically the same now.

Is there any incentive at the moment to run a HAF node?

@mahdiyari Thanks for the guide 💪

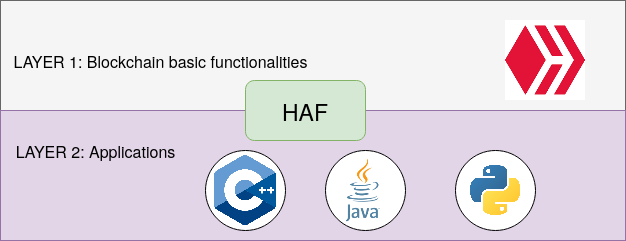

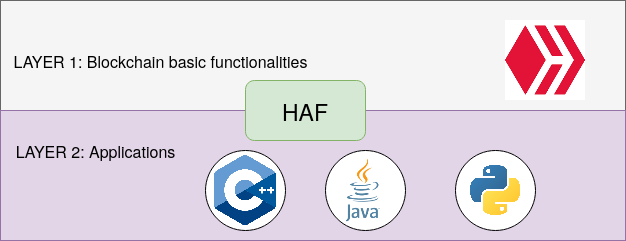

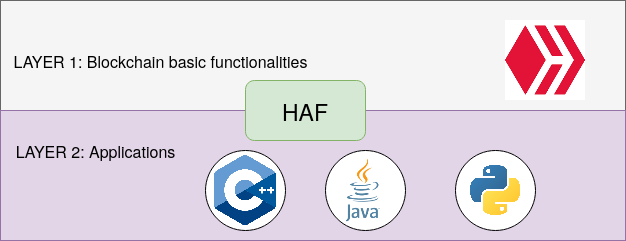

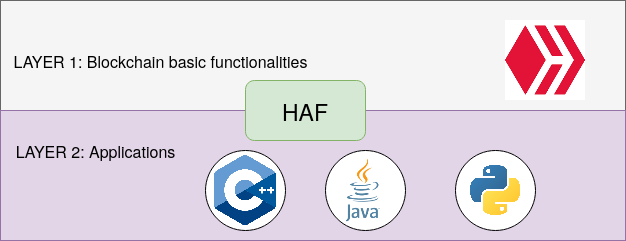

A lot of API nodes currently run HAF. After the next release, I expect all of them will (because new hivemind features are only being added to HAF-based version of hivemind).

Other than general purpose API node operators, anyone who builds a HAF app will need to run a HAF server (because their app will run on it) or else they will need to convince someone else who has a HAF server to run it for them.

We're also building several new HAF apps that will probably encourage more people to want to run a HAF server.

Cannot wait for these apps to be ready.


Wow!!! You developers are just so cool!

I would highly recommend for anyone wanting to start a multiplexer session for a HAF node to use a .service file to run it in the background. This will simplify the process of starting and stopping services without having to jump into different sessions repeatedly.

This has been a core thing that I have used for my own unity games to run with mongodb.

Also Tmux is more customizable than screen, but each to their own.
