The tables for expected CPU magnitude were quite popular. It's been a busy couple of days improving both the quality of the script and the methodology. Mining the host data files for information about GPU performance is a bit trickier than it was for CPUs: machines with multiple GPUs need to be excluded because we can't be sure that all of the GPUs are running the same project.
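A minimal Python sketch of the multi-GPU exclusion. It assumes each host record carries a coprocessor string made of bracketed, pipe-separated groups such as `[CUDA|GeForce GTX 1070|1|8192MB|...]`, with the number of identical GPUs in the third field. That format, the vendor tags in `GPU_TAGS`, and the function names are assumptions for illustration, not the script's actual code.

```python
import re
from typing import List, Optional, Tuple

# ASSUMPTION: a host's coprocessor string looks like
# "[BOINC|7.8.3][CUDA|GeForce GTX 1070|1|8192MB|...]", where each bracketed
# group is pipe-separated and the third field is the number of identical GPUs.
GROUP = re.compile(r"\[([^\]]*)\]")
GPU_TAGS = {"CUDA", "CAL", "INTEL"}  # hypothetical GPU vendor tags


def gpu_groups(coprocs: str) -> List[Tuple[str, str, int]]:
    """Return (vendor_tag, model, count) for every GPU group in the string."""
    groups = []
    for body in GROUP.findall(coprocs):
        fields = body.split("|")
        if fields[0] in GPU_TAGS and len(fields) >= 3 and fields[2].isdigit():
            groups.append((fields[0], fields[1], int(fields[2])))
    return groups


def single_gpu_model(coprocs: str) -> Optional[str]:
    """Return the GPU model if the host has exactly one GPU, otherwise None.

    Multi-GPU hosts are skipped because we cannot tell whether every card
    is working on the same project.
    """
    groups = gpu_groups(coprocs)
    if len(groups) == 1 and groups[0][2] == 1:
        return groups[0][1]
    return None


print(single_gpu_model("[BOINC|7.8.3][CUDA|GeForce GTX 1070|1|8192MB|38531]"))  # GeForce GTX 1070
print(single_gpu_model("[BOINC|7.8.3][CUDA|GeForce GTX 1070|2|8192MB|38531]"))  # None
```

A host reporting two identical cards (count 2) or two different GPU groups is dropped. That is the conservative choice: it throws away some data, but it never credits one card with work done by another.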

The script now reports a relative error with each magnitude value. I doubt the exact amount of error is of interest to many people, but I am now using a cutoff of +/-35% for removing data from the table entirely. The larger projects (SETI, Einstein, GPUGrid) have very accurate results (less than +/-10%) for Nvidia GPUs.
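One way to compute a relative error per GPU model and project and then apply the +/-35% cutoff is sketched below. Defining relative error as the standard error of the mean divided by the mean is an assumption; the post does not say how the script computes it. `summarize` and `build_table` are hypothetical names.

```python
import statistics
from typing import Dict, List, Tuple

CUTOFF = 0.35  # drop entries whose relative error exceeds +/-35%

Key = Tuple[str, str]  # (gpu_model, project)


def summarize(mags: List[float]) -> Tuple[float, float]:
    """Return (mean magnitude, relative error) for one GPU model on one project.

    ASSUMPTION: relative error = standard error of the mean / mean.
    """
    if len(mags) < 2:
        # Too little data to estimate any spread.
        return (mags[0] if mags else 0.0), float("inf")
    mean = statistics.mean(mags)
    if mean == 0:
        return 0.0, float("inf")
    sem = statistics.stdev(mags) / len(mags) ** 0.5
    return mean, sem / mean


def build_table(samples: Dict[Key, List[float]]) -> Dict[Key, Tuple[float, float]]:
    """Keep only the entries whose relative error passes the cutoff."""
    table = {}
    for key, mags in samples.items():
        mean, rel_err = summarize(mags)
        if rel_err <= CUTOFF:
            table[key] = (round(mean, 2), round(rel_err, 3))
    return table
```

Using the standard error rather than the raw standard deviation means popular cards with many hosts pass the cutoff more easily, which matches the observation that the big projects have the tightest numbers.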

MooWrapper is excluded because I couldn’t find their /stats/hosts file.

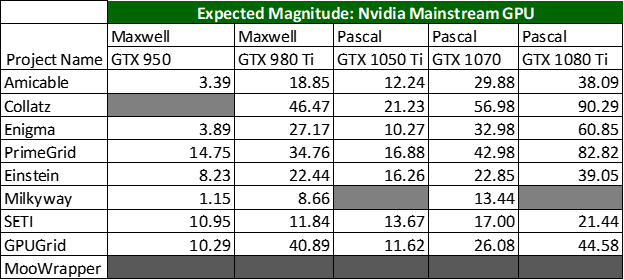

Results: Nvidia

I considered including Kepler GPUs in this chart, since they were the last generation of Nvidia GPUs in which FP64 compute wasn't treated as an enterprise/datacenter feature. Unfortunately, it became apparent that PrimeGrid was still the better choice for these cards. Additionally, no Kepler model had enough data to fill in the less popular projects.

Some things to keep in mind if you are using Nvidia GPUs:

- Run the PPS-sieve subproject on PrimeGrid to maximize your RAC. It makes a huge difference.

- With a few exceptions, PrimeGrid and Collatz are consistently the best choices for maximizing rewards

- If your OS is Linux, you can't run CUDA jobs on Enigma

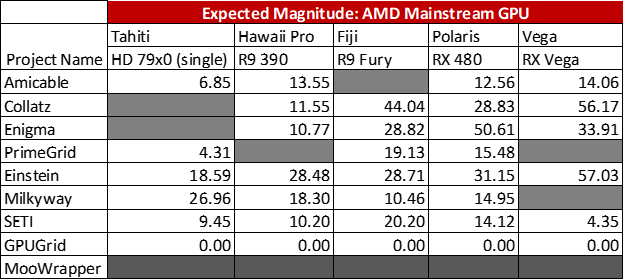

Results: AMD

Separating different AMD GPU models is incredibly difficult using only the name stored in the card's BIOS. In the end I had to combine all the Tahiti 7900 series GPUs into a single category. This category excludes the HD 7990, which is a dual-GPU card (2x HD 7970). The values given for the combined category are probably close to those of a 7970. A similar issue led me to combine the R9 Fury/R9 Nano/R9 Fury X into a single entry, and the RX Vega 56/64 into another.
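The merging can be sketched as an ordered list of regular expressions where the first match wins. The patterns and category labels below are hypothetical; the names actually reported by hosts vary and would need to be checked against the host files.

```python
import re
from typing import Optional

# HYPOTHETICAL patterns, checked in order; the first match wins.
# A category of None means "exclude this card from the table".
CATEGORIES = [
    (re.compile(r"\b7990\b"), None),  # dual-GPU HD 7990 (2x HD 7970)
    (re.compile(r"tahiti|\b79[0-9]0\b", re.IGNORECASE), "HD 7900 series (Tahiti)"),
    (re.compile(r"fiji|fury|nano", re.IGNORECASE), "R9 Fury / R9 Nano / R9 Fury X"),
    (re.compile(r"rx vega|vega 10", re.IGNORECASE), "RX Vega 56/64"),
]


def categorize(name: str) -> Optional[str]:
    """Map a reported GPU name to a table category, or None to exclude it."""
    for pattern, category in CATEGORIES:
        if pattern.search(name):
            return category
    return name  # no merging rule applies: the name is its own category
```

Putting the 7990 rule first matters: otherwise the broader `79[0-9]0` pattern would fold it into the Tahiti category.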

Some things to keep in mind if you are using AMD GPUs:

- GPUGrid is an Nvidia-only project

- Run Milkyway if you have a GPU with strong FP64 performance (Tahiti)

What’s Next?

Some of the hardware running BOINC projects can be pretty ridiculous. (Seriously, can I borrow that Tesla V100?)

Next, I am going to try to put together tables for a mix of HEDT and server CPUs. Then I will try to make tables for workstation and server GPUs, if possible. This data may or may not be pulled from the host files, and it will probably be reported as maximum RAC to allow easier conversion to Mag in the future.

- Do you have an Extreme Edition CPU, Threadripper, Xeon, Opteron, or Epyc that no one else is running?

- Do you have a Titan, Quadro, Tesla, FirePro, or Instinct GPU?

Send me a message with your steady state RAC (or credits/day) for as many projects as you can to help fill in the high end tables.
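Why "steady state" matters: BOINC's RAC is an exponentially weighted average of granted credit with a one-week half-life, so a newly started host takes weeks to approach its true credits/day. The simulation below is a simplification that assumes the same credit is granted once per day and that RAC starts from zero; `simulate_rac` is a hypothetical helper.

```python
import math
from typing import List

HALF_LIFE_DAYS = 7.0  # BOINC's RAC half-life is one week


def simulate_rac(daily_credit: float, days: int) -> List[float]:
    """RAC at the end of each day for a host earning the same credit daily.

    Simplified model: one credit grant per day, starting from RAC = 0,
    using BOINC-style exponential averaging:
        rac = rac * w + (1 - w) * credit_per_day,  w = 2 ** (-1 / 7)
    """
    w = math.exp(-math.log(2) / HALF_LIFE_DAYS)
    rac, history = 0.0, []
    for _ in range(days):
        rac = rac * w + (1 - w) * daily_credit
        history.append(rac)
    return history


history = simulate_rac(1000.0, 28)
print(round(history[6], 1), round(history[27], 1))  # prints: 500.0 937.5
```

With a constant daily credit C, RAC after n days is C(1 - 2^(-n/7)): half of C after one week and about 94% after four weeks. A host therefore needs roughly a month before its RAC is a fair stand-in for its credits/day.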

Thanks to jayrik88 on Reddit: the data you provided helped me figure out how to handle the 7900 series issue, and the configuration of your machine helped me find a bug in the script.

I run a Threadripper 1950X and am doing a little experiment (see here and here). I try to run 12 (previously 14) projects and compare their output (here on gridcoinstats.eu).

I would like to extract the CPU time and credit I receive per task from the files provided by the projects so I can compare them, but I have not figured out how to do that. Do you have any ideas?

If you are interested in some factors that influence short-term output, my second link might provide some information.

OK, I looked into this a bit. There is no way to get <project_url>/results.php to return an XML file, even though a request was made to the BOINC developers several years ago.

I think that I can work up a bash script to output the results.php data for a specific host to a text file. I should have something in a day or two.
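The same idea can be sketched in Python: fetch a host's results.php page and compute credit per CPU-hour for each task. The `hostid`/`offset` query parameters and the column positions (`CPU_COL`, `CREDIT_COL`) are assumptions about the page layout and will likely need adjusting per project; `fetch` and `credit_per_cpu_hour` are hypothetical helpers.

```python
from html.parser import HTMLParser
from typing import List, Optional
from urllib.request import urlopen

# ASSUMED column order of the task table: Task, Work unit, Sent, Reported,
# Status, Run time (s), CPU time (s), Credit, Application.
CPU_COL, CREDIT_COL = 6, 7


class ResultsTable(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[str]] = []
        self._row: Optional[List[str]] = None
        self._cell: Optional[List[str]] = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # header rows use <th>, so they stay empty
                self.rows.append(self._row)
            self._row = None


def credit_per_cpu_hour(html: str) -> List[float]:
    """Credit per CPU-hour for every task row with numeric values."""
    parser = ResultsTable()
    parser.feed(html)
    rates = []
    for row in parser.rows:
        try:
            cpu = float(row[CPU_COL].replace(",", ""))
            credit = float(row[CREDIT_COL].replace(",", ""))
        except (IndexError, ValueError):
            continue  # pending tasks ("---"), short rows, etc.
        if cpu > 0:
            rates.append(credit / (cpu / 3600))
    return rates


def fetch(project_url: str, hostid: int, offset: int = 0) -> str:
    """Download one page of a host's task list (query parameters ASSUMED)."""
    url = f"{project_url}/results.php?hostid={hostid}&offset={offset}"
    with urlopen(url) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Parsing is kept separate from fetching so that saved pages can be re-processed without hitting the project servers again.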

Sounds great! I am used to processing data that way. Unfortunately, I have not learned how to get the data into that form yet.

But as soon as it is in a table, it is a pleasure to make nice plots out of it :)

Perhaps I can help fill some gaps. My 7990 on Enigma does 430,000 RAC per core, for a magnitude of around 29. It might not be at full (steady-state) RAC yet, but if it isn't, it's very close.

Thanks!

Any chance you would be willing to run on Collatz for a day or two and return your daily credit?

While I wait for my 1070 Ti, I'll bookmark this! Thanks for the work!

I want to upgrade to a Vega anyway, and seeing how it performs on Einstein is another great point.

For most GTX cards, the table shows a higher magnitude for Einstein@home than for Amicable Numbers. In many comments, users say Einstein@home is more competitive and will yield a lower magnitude, and that is what I observe. I run both on one GTX 1060 (50% each), and Amicable gives about 20% higher magnitude on my card. The total for both projects is about 11, but I guess the card works on these projects only around 16 hours a day.
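A quick check of those numbers, assuming the total of about 11 splits so that Amicable ($M_A$) is 20% above Einstein ($M_E$), and that magnitude scales linearly with hours crunched:

$$
M_A + M_E \approx 11,\qquad M_A \approx 1.2\,M_E \;\Rightarrow\; M_E \approx \frac{11}{2.2} = 5,\qquad M_A \approx 6
$$

$$
M_{24\,\mathrm{h}} \approx 11 \times \frac{24}{16} = 16.5
$$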

Moo host stats: http://moowrap.net/stats/host.gz (@barton26 helped me find it).
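Since the host files are gzipped XML, they can be streamed without unpacking them first. A sketch using only Python's standard library; the `<host>`, `<id>`, `<expavg_credit>` and `<coprocs>` element names are assumptions about the export format, and `iter_hosts` is a hypothetical helper.

```python
import gzip
import xml.etree.ElementTree as ET
from typing import Iterator, Optional, Tuple


def iter_hosts(path: str) -> Iterator[Tuple[Optional[str], float, str]]:
    """Yield (host_id, rac, coprocs) from a gzipped BOINC host stats file.

    ASSUMPTION: each host is a <host> element with <id>, <expavg_credit>
    and (optionally) <coprocs> children; adjust to the real file.
    """
    with gzip.open(path, "rb") as f:
        for _, elem in ET.iterparse(f, events=("end",)):
            if elem.tag == "host":
                yield (
                    elem.findtext("id"),
                    float(elem.findtext("expavg_credit", default="0")),
                    elem.findtext("coprocs", default=""),
                )
                elem.clear()  # drop this host's children to keep memory low
```

Streaming with `iterparse` avoids loading the whole file into memory, which matters for the larger projects whose host files contain hundreds of thousands of entries.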

Do you mind if I translate your post into French? I'll give you credit.

Feel free to do so, just pass on any hardware performance information that you get.

Thanks for the info! I just switched from Milkyway to PrimeGrid because my video card is slower than the lowest Nvidia card you have listed. Hoping for a return on my grinding now!

dutch

I will 10000000% say I needed this as a reference TODAY, and I am going to use it. I just want to see a real-time trending tool, and I am willing to host the website and domain for the many projects related to this. People like you come along about every 3-4 months and post a chart somewhere, and it only covers the current and previous GPU series. (Really, my 750 Ti does more on AM than your 970, and on Collatz it gets 1 1/10th.) I doubt you even touch plan_class, video card kernel settings, or application-specific settings. They are easy to Google, and I am happy to share my webshare with examples for running 4 Moo tasks on a 10xx-series card without them affecting each other; I ran 2 Collatz tasks at once for a while with the same result. The project's application matters too: which extensions it uses, the same as with CPUs and why some Xeons are better than others. Thank God with GPUs you don't have to look at L2 and L3 cache rates (yes, and bus bandwidth and speed), but there are so many factors. I would love for the greylist website to offer more "Team help needed here" guidance rather than "where can you give one project less help than its science needs and make more Gridcoin", because then you will find out that the current number of Gridcoin team members (versus total project users), the hardware currently online, and the number of hosts do not matter one bit: it's all about timing. Some of us are here for science, some just to make a buck. In my 1.5 years in Gridcoin, I was sent to /dev/null on irc.freenode.org, interestingly right after exposing network-oversight problems and an area of the network's source code with a questionable, possibly future 0-day, security vulnerability. Since there is no real "Gridcoin Committee" or "Gridcoin Foundation", there are not always the right checks and balances, because everybody is so focused on how much $$$$ their GPU can make. I will tell you: not much.
Not a 10xx series, not one of the current R9s, or whatever. The last batch of cards was rushed out thanks to Gridcoin and SETI@home getting news exposure, along with BTC, Christmas, and gamers (kids do get mad about lag), so it will be nice to see the second-quarter BOINC cards. But we need developers to work with projects to make use of GPU extensions and their full capabilities. This 100% throws a wrench in your sprocket, sorry, but maybe it gets you on the right track. Maybe we could file a boinc-server code update request for a set number of stats updates instead of project-specific schedules (but, eh, on Linux we do what we want), since some projects update once a day, others 4 times a day, others every hour, and some even every 10/15 minutes. So please tell me how to figure out a real chart, and let's get the real numbers. Even if it is non-static, both non-linear and linear, and always floating, I have the resources to develop, if not host, the future live production site. This would/could help Gridxit Round #2 (to be clear, I don't want to merge Team Gridcoin and SETI|koa dutch) drop the Team Gridcoin requirement, so everyone could earn GRC, and it would show users where projects may need more CPU or more GPU, because not everything out there is on 24/7. Not all of us pay $250-500 a month in power for racks and in-home machines combined to do this; I am just a scientist. I just cannot code, so I hope I sparked your skills. It's interesting, since these are both linear and non-linear, and the numbers are static, so really just making a chart with N for the resulting MAG under each project AND each GPU series would actually be more correct. You could inspire (or I just did!) a BOINC project to compute and hypothesize the possible outcome at that one month/day/year/hour/minute/second using a snapshot of only that moment.
On the non-linear side: each project updates its stats at different intervals, so it's not really every superblock on Gridcoin's end that changes your MAG. It depends on #1, each project administrator's choice of update-stats settings, and yes, #2, the superblock time/date/etc. I am just trying to show a flaw and give N so you can then figure out N on your own. I cannot do all the work and only have the skills to compute half; I hope you can figure out N on your own, or we can work together. Take Amicable Numbers, for example. You can see my hardware / RAC / full credit at https://gridcoinstats.eu/cpid/1ac793c529cd6ffa942afaef7c5068c2 (that's meh). Since the focus is on GPUs, look at AM, because DD@home is CPU-only and I haven't crunched it in 3 weeks (I moved to ODLK, since that is what the historical and recorded application is/was for, not ODLK1, because it did not exist). I run a 2 GB GTX 750 Ti, and for 20% of the 24-hour cycle a 2 GB GT 730 (actually, I keep this card paused so I can do meteorological things; the radar display takes priority). Then take the time to look back at my historical stats. And wait, did I say ignore DD@home, but that I haven't crunched it for 4 weeks now? My mag was only 80. DD@home actually used to have a GPU application, and I am sure it will again, and SONM? SOMN? Whatever that Russian BOINC-for-hire federation thingy group was that BOMBED. So now the superblock side of the N x N / n = N predictable snapshots, and why: both of these projects update their internal stats at different intervals, so the MAG for one project is dropping as slowly as a snail, while the other, Amicable Numbers, is dropping at a high rate. Now the other factor I have not mentioned: that day's or that interval's pie of Team Gridcoin users versus all users, and your hardware's slice of it, is based on the WUs completed in that period. That factor changes every hour as machines go on, go off, get newly built, or melt down, so it's fluid, or Spectre.
I love that new people are contributing to figuring out stats and statistical graphing and charting algorithms; we need them. We, as in me and you, sir/ma'am, are as equal as anyone else: it's an open-source project. I wish we would make a real-time chart that monitors all the whitelisted (WL) projects, and the Greylist once I get it worked in (i.e., after g_uk screws up with his rush-rush-rush, since he JUST got the idea, and nobody has been working on the networking and scaling of a live, non-human project-monitoring whitelist system, since they can't honor what project votes are for). We waste our vote weight because of whales, as if the needs of the one outweighed the needs of the community under the current vote system, and GRC8 wants to create as many votes as possible to get their motives online. Anyway, side rant; you caught me at 4:20, so this is legit math. You are onto something. Others have similar tools, and ifoggs and barton26, along with others, should all open-source these calculators on GitHub, but since they are static, they still need the glue: the Gridcoin Grey List (since I am rounding 40, it's E, for my goatee hairs), as some projects are run on it. I am 100% serious and being honest: I encourage any BOINC project admins to publish both #1 their hardware specs and #2 their stat update intervals. I am happy to dig into the BOINC source code to help with N while I work on n (GPU vs CPU) project credit updates, since updating every minute would take clock cycles needed elsewhere. I have NOT looked into BOINC-Server or the Docker BOINC images, but they can be used on Linux (Proxmox rocks socks) and even with WSL (https://github.com/RoliSoft/WSL-Distribution-Switcher and https://github.com/marius311/boinc-server-docker), so you can figure out the variables going forward yourself.