This is my first post. I hope you guys like it.

The Deep Web

It is a dark, hermetic world that traditional search engines cannot reach. On the Darknet, users find everything that is illegal elsewhere: distribution of files that violate copyright, support for terrorist activities, pedophilia, drug trafficking, human trafficking, assassins for hire, ultragore, publication of opinions that are dangerous to certain political regimes, publication of secret information about legal organizations (secret services or large corporations), publication of secret information about criminal organizations, among other things.

The term Darknet was coined in November 2002 in the paper "The Darknet and the Future of Content Distribution", written by four Microsoft researchers: Peter Biddle, Paul England, Marcus Peinado and Bryan Willman. Users move in anonymity here, unlike on the regular internet, where every user leaves a trail. One of the best-known paths to the Darknet leads through the Tor network. The principle is simple: the user downloads a program that connects to a Tor server, which then randomly chooses other servers, so that from the outside the connection is impossible to follow. This is ideal for criminals, but also for other social groups. There are search directories and social networks here too, and Tor is used by the US Army and by political activists.

The importance of Tor was demonstrated in Egypt in 2011. During the Arab Spring, thousands of young people gathered via Facebook, and the Hosni Mubarak regime tried to prevent it. As censorship increased, so did the number of Tor users. But Tor has a weak point: on the way to the Tor server, the user is not anonymous.

Another way to get to the Darknet is Freenet, originally designed by Ian Clarke. Every Freenet user frees up space on his hard drive, so his computer becomes part of the anonymous Darknet network. "The general idea behind Freenet is to provide people with a secure and uncensored system to publish and consume content," says Florent Daigniére, co-founder of Freenet. That makes Freenet popular, for example, among users who share files, and illegal copies abound in many of the exchanged packages. The creators of Freenet have no problem with that, since their ultimate goal is free communication on the Internet. "Censoring means being judge and jury at the same time. I do not know of any system, including democracy, that has the right to do this. I reject any form of censorship," says Florent Daigniére.

The current development of the Internet supports his argument: search engines and social networks gather more and more data about their users without their authorization, and dictatorships around the world try to control citizens' communication by closing the internet sites that make them uncomfortable. Tor and Freenet offer an alternative, even if they sometimes make abuse possible.

The deep internet is above all a virtual meeting place where illegal transactions are negotiated: database sales, false passports, and stolen goods ranging from jewelry to works of art. There are also plenty of things you do not want to see: snuff videos, shocking photos, mutilations and other extreme content that is punishable by jail in many countries. That is the world of the deep internet, a place not everyone can enter.

First of all, keep in mind that to navigate these deep waters you need good protection, because all kinds of people are lurking here, hoping to find some passer-by to attack: government agents, computer criminals and many others looking for a victim. So the first thing to do is to change your identity: your IP address, which gives away the place where you live. Tracing a computer is very easy; with the right page, you can find a person's address in great detail. To walk the deep internet you have to mask yourself with appropriate software, Real Hide IP for example. You should not download any type of content, because you can never be sure of what you are downloading. Here you will not find the typical teenagers who want to infect your computer, but professionals and people who could do you a lot of harm. Another precaution to take when navigating the wild side of the internet is to disconnect or cover the webcam, so that nobody can identify you, not even by your face.

The most famous place on the deep internet is a site called Silk Road, an ideal anonymous market for prohibited transactions that is even mentioned in TV series such as Person of Interest (2011) (season 2, episode 9).

On October 2, 2013, it was shut down by the FBI, and its founder, known by the alias "Dread Pirate Roberts", was identified as Ross William Ulbricht. On November 6, 2013, Forbes and Vice reported that the site was back online. For years it has served as an intermediary in all types of illegal transactions, from drugs to industrial secrets, although it has its limits: neither weapons nor pedophilia.

This market runs on a completely virtual and anonymous currency called Bitcoin, a decentralized cryptocurrency conceived in 2009 by Satoshi Nakamoto. The term also applies to the protocol and to the P2P network that supports it. Transactions in Bitcoin are made directly, without the need for an intermediary. Unlike most currencies, Bitcoin is not backed by any government, nor does it depend on trust in any central issuer. Instead, it uses a proof-of-work system to prevent double spending and reach consensus among all the nodes that make up the network.
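To give a feel for how a proof-of-work system makes nodes spend effort before a block is accepted, here is a minimal Python sketch. It is a simplification and not Bitcoin's actual algorithm: the names `mine` and `verify`, the string-based block and the "leading hex zeros" rule are assumptions made for illustration.

```python
import hashlib


def mine(block_data: str, difficulty: int) -> int:
    """Return the first nonce whose SHA-256 digest starts with `difficulty` hex zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a proposed nonce costs a single hash, unlike finding it."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


if __name__ == "__main__":
    data = "alice pays bob 1 coin"  # hypothetical transaction text
    nonce = mine(data, difficulty=4)
    print(nonce, verify(data, nonce, 4))
```

The point of the sketch is the asymmetry: finding the nonce takes many attempts, while any other node can check it with one hash.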

On the deep web, anonymous data transfer works in the following way. The data is hosted on servers, exactly as on the surface web. The difference is that the paths followed by that data are not predictable and the data is encrypted: the software routes the Internet traffic through a worldwide network of volunteers and different servers that hide user information, preventing any monitoring. As the authors of Tor explain on the official page, there are many reasons why Tor is slower than a normal connection. The first has to do with the design of the Tor network: the traffic hops from one node to another and the delays add up. As the Tor network gets more help from volunteers and more money from sponsors, the number and speed of the nodes will improve the overall speed of the service. But do not expect great speeds; it is the price you have to pay for anonymity.
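The idea of traffic hopping through several volunteer nodes, each one hiding a bit more of the trail while adding its own delay, can be sketched roughly like this in Python. Everything here is an assumption for illustration: the relay names, keys and latencies are made up, and the XOR "cipher" is a toy stand-in that is not secure and is not what Tor actually uses.

```python
import hashlib
import random


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with a SHA-256-derived keystream. NOT secure."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def build_circuit(relays: dict, hops: int = 3, rng=random) -> list:
    """Pick `hops` distinct relays at random."""
    return rng.sample(sorted(relays), hops)


def wrap(message: bytes, circuit: list, relays: dict) -> bytes:
    """Add one layer per relay, innermost layer for the last relay.

    With this XOR toy the order does not actually matter; a real layered
    cipher would require peeling in the right order.
    """
    for name in reversed(circuit):
        message = keystream_xor(relays[name]["key"], message)
    return message


def send(onion: bytes, circuit: list, relays: dict):
    """Each relay removes one layer; the latencies add up hop by hop."""
    total_ms = 0
    for name in circuit:
        onion = keystream_xor(relays[name]["key"], onion)
        total_ms += relays[name]["latency_ms"]
    return onion, total_ms


# Hypothetical relays with made-up keys and latencies.
RELAYS = {
    "relay_a": {"key": b"key-a", "latency_ms": 40},
    "relay_b": {"key": b"key-b", "latency_ms": 85},
    "relay_c": {"key": b"key-c", "latency_ms": 120},
    "relay_d": {"key": b"key-d", "latency_ms": 60},
}

if __name__ == "__main__":
    circuit = build_circuit(RELAYS)
    onion = wrap(b"hello", circuit, RELAYS)
    print(circuit, send(onion, circuit, RELAYS))
```

Every extra hop adds another layer of protection, but also another chunk of delay, which is the trade-off described above.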

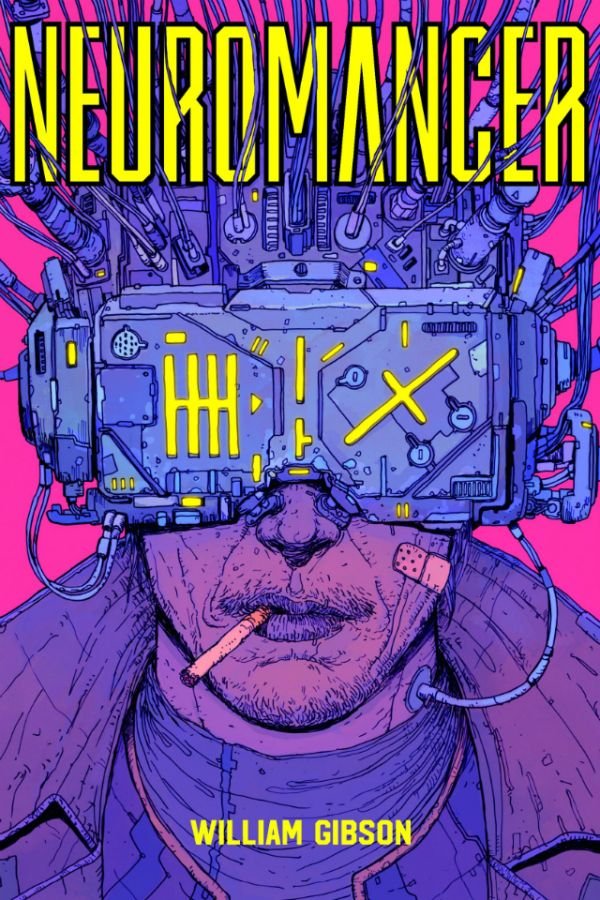

The concept now known as a data haven dates from the 1980s. The term was coined by writer William Gibson in his 1986 novel "Count Zero" (the second book of the Sprawl trilogy, along with "Neuromancer" and "Mona Lisa Overdrive"). A data haven consists of a server or a network that keeps data out of the reach of the state in two ways. The first is encryption or cryptography: a procedure that uses an encryption algorithm with a certain key to transform a message, regardless of its linguistic structure or meaning, so that it is incomprehensible, or at least difficult to understand, for anyone who does not have the secret decryption key. The second is the location of these clandestine networks in areas that are considered highly secure. For example, it could be a country that has no laws restricting the use of data, or that enforces them poorly, and that in addition has no extradition treaties (centralized or territorial model).

Another way to create a data haven is to use the servers and computers of like-minded individuals in anonymous P2P networks (decentralized or de-territorial model). An example of this is Freenet, which is basically a decentralized, censorship-resistant information distribution network. It works by pooling the bandwidth and storage space of the computers that make up the network, allowing its users to publish or retrieve different types of information anonymously.

The motivations for creating a data haven are very diverse, but they converge on a few specific reasons. In nations where communications go through a censorship process, there is usually a group, office or official ministry in charge of that control. Even where censorship is not institutionalized by the state, different groups of people may have different ideas about what is offensive, acceptable or dangerous. Freenet claims to be a network that eliminates the possibility of one group imposing its beliefs or values on others. In essence, no one should be allowed to decide what is acceptable for others. Tolerance of everyone's values is encouraged, and if that fails, the user is asked to turn a blind eye to content that is opposed to his own point of view.

The technical design of Freenet consists of an unstructured P2P network of non-hierarchical nodes that transmit messages and documents between them. Nodes can function as end nodes, from where document searches begin and are presented to the user, or as intermediate routing nodes.

Each node hosts documents associated with keys, plus a routing table that associates other nodes with a record of how well they have performed at retrieving different keys.

To find a document in the network, given a key that describes it, a user sends a message to a node requesting the document and providing the key. If the document is not found in the local database, the node selects the neighbor from its routing table that it believes will be able to locate the key most quickly and passes the request on, remembering who sent the message so that the path can be retraced later. The node that receives the request repeats the process until a node storing the document associated with the key is found, or until the request has passed through a maximum number of nodes, known as the time-to-live value.

None of the intermediate nodes knows whether the previous node in the chain was the originator of the request or just a router. When the path is retraced, none of the nodes can know whether the next node is the one that actually had the document or was just another router. In this way, the anonymity of both the user who made the request and the user who answered it is ensured.

When the document corresponding to the requested key is found, a response is sent back to the originator of the request through all the intermediate nodes that the search message passed through. Intermediate nodes can choose to keep a temporary copy of the document along the way. In addition to saving time and bandwidth in future requests for the same document, this copy helps prevent censorship of the document, since there is no single "source node", and makes it difficult to guess which user originally published the document.
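The request process described above (forward to the most promising neighbor, stop at a hop limit, cache on the way back) can be sketched in a few lines of Python. This is a rough model under stated assumptions, not Freenet's real code: the `Node` class, the `link` helper and the `hops_left` parameter are hypothetical, the ordered `neighbors` list stands in for the routing table, the shared `visited` set is a shortcut to avoid loops, and every node always caches even though real nodes may choose not to.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.store = {}      # key -> document held locally
        self.neighbors = []  # best guess first (stand-in for the routing table)

    def request(self, key, hops_left, visited=None):
        """Return the document for `key`, or None if the search fails."""
        visited = set() if visited is None else visited
        visited.add(self.name)
        if key in self.store:
            return self.store[key]
        if hops_left <= 0:
            return None  # hop limit reached
        candidates = [n for n in self.neighbors if n.name not in visited]
        if not candidates:
            return None
        # Pass the request to the neighbor judged most likely to find the key.
        document = candidates[0].request(key, hops_left - 1, visited)
        if document is not None:
            self.store[key] = document  # keep a copy on the way back
        return document


def link(a, b):
    """Make two nodes neighbors of each other."""
    a.neighbors.append(b)
    b.neighbors.append(a)


if __name__ == "__main__":
    a, b, c = Node("a"), Node("b"), Node("c")
    link(a, b)
    link(b, c)
    c.store["some-key"] = "some document"
    print(a.request("some-key", hops_left=5), "some-key" in b.store)
```

Note that each node only ever talks to its direct neighbor, so from the code's point of view `b` cannot tell whether `a` started the request or merely forwarded it.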

Essentially the same path-tracing process is used to insert a document into the network: a request is sent for a nonexistent file, and once the request fails, the document is sent along the same route that the request followed. This ensures that documents are inserted into the network in the same place where requests will look for them. If the initial request does not fail, then the document already exists and the insert "collides".
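As a rough illustration of the insert logic, here is a small Python sketch. It assumes the route a request would follow is already known and given as a list of node stores (plain dictionaries); the function name `insert` and the `hops_to_live` parameter are made up for this example and do not come from Freenet itself.

```python
def insert(path, key, document, hops_to_live):
    """Try to insert `document` under `key` along `path`.

    `path` is a list of dicts (one per node) in the order a request
    for `key` would visit them. Returns True on success, False on a collision.
    """
    route = path[: hops_to_live + 1]
    # Step 1: send a request for the key. If any node on the route
    # already holds it, the request succeeds and the insert collides.
    for store in route:
        if key in store:
            return False
    # Step 2: the request failed, so send the document along the same route.
    for store in route:
        store[key] = document
    return True


if __name__ == "__main__":
    nodes = [{}, {}, {}]
    print(insert(nodes, "some-key", "some document", hops_to_live=2))  # True
    print(insert(nodes, "some-key", "another document", hops_to_live=2))  # False
```

Because the document travels the same route a request would take, later requests for that key run straight into a copy.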

Initially, no node has information about the performance of the other nodes it knows. This means that the initial routing of requests is random and that the newly created Freenet networks distribute the information randomly between their nodes.

Fproxy is the Freenet web interface, which can be accessed with any web browser. From here we can access Freesites (the Freenet equivalent of a website, viewable with any web browser), manage our connections with other nodes, view some statistics on the operation of the node, review the list of downloads and uploads, and view and modify the configuration of our node.

The standard port of Fproxy is 8888. It can be accessed only from our own computer, by pointing our browser to http://127.0.0.1:8888/ or http://localhost:8888/. If we want other people on our local network to have access to our node (either to view Freesites or to administer it), we must change the "IP address to bind to" and "Allowed Hosts" fields on the configuration page.
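If you want to check from a script whether Fproxy is answering on that address, something like the following Python sketch could work. The helper names `fproxy_url` and `is_reachable` are invented for this example; the only things taken from the text are the default address 127.0.0.1 and port 8888.

```python
import http.client
import urllib.error
import urllib.request


def fproxy_url(host: str = "127.0.0.1", port: int = 8888) -> str:
    """Build the local Fproxy address (defaults taken from the text above)."""
    return f"http://{host}:{port}/"


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if something answers HTTP at `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # a server replied, even if with an error status
    except (OSError, http.client.HTTPException):
        return False  # connection refused, timeout, or malformed reply


if __name__ == "__main__":
    print(fproxy_url(), is_reachable(fproxy_url()))
```

This only tells you that some web server is listening on the port, not that it is really a Freenet node.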

To discover content on the Web, search engines use web crawlers that follow hyperlinks through the known virtual port numbers of common protocols. This technique is ideal for discovering resources on the surface web, but is often ineffective at finding deep web resources. These crawlers do not try to find the dynamic pages that result from database queries, because the number of possible queries is indeterminate. It has been observed that this can be (partially) overcome by providing links to query results, but this could unintentionally inflate the popularity of a member of the deep Web.
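A tiny Python sketch shows why a link-following crawler misses pages that only exist as the answer to a query. The `SITE` dictionary is a made-up, in-memory stand-in for a website, and `crawl` and `LinkExtractor` are names chosen for this example; a real crawler would fetch pages over the network.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(site: dict, start: str) -> set:
    """Breadth-first crawl that only follows hyperlinks."""
    seen = {start}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(site.get(url, ""))
        for link in parser.links:
            if link in site and link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


# Hypothetical site: the search result page is reachable only by
# submitting the form, never through a hyperlink.
SITE = {
    "/": '<a href="/about">About</a><form action="/search"><input name="q"></form>',
    "/about": '<a href="/">Home</a>',
    "/search?q=tor": "<p>Result page generated from a database query</p>",
}

if __name__ == "__main__":
    print(sorted(crawl(SITE, "/")))
```

The crawler finds the home and about pages but never reaches the search result, because no hyperlink points to it. Adding a plain link to that result page would make it visible, which is the partial workaround mentioned above.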