This proposal requests funding for the work done so far, and planned for the near future, on the image server cluster that provides images (pictures) to hive.blog, peakd.com, and other Hive frontends.

Explanation of costs

In addition to the development work, this proposal includes the charges for servers to host the images for three months (until this proposal expires).

After this proposal expires, we’ll make a separate proposal at a much lower rate that just covers the costs of the servers and server maintenance over a longer period of time.

Most of the cost of this proposal is for the initial development work and one-time computer purchases (for backend fileservers). Roughly speaking, the costs are: $50K in labor, $10.5K in new computer equipment purchases, and $2.5K in hosting charges (much of that is network bandwidth over a 3-month period: the previous month plus the next 2 months), for a total of $63K.

For simplicity, we’re making a 63-day proposal that pays out 1K HBD per day.
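As a quick sanity check on the figures above, the sketch below adds up the three quoted cost categories and compares the total with the payout schedule. Treating 1 HBD as roughly 1 USD is an assumption made only for this comparison.

```python
# Cost figures quoted in the post, in thousands of US dollars.
costs_k = {
    "labor": 50.0,
    "new computer equipment": 10.5,
    "hosting (3 months)": 2.5,
}

total_k = sum(costs_k.values())

# Payout schedule quoted in the post: 1K HBD per day for 63 days.
# Assumption for this check only: 1 HBD is worth roughly 1 USD.
days = 63
payout_k_per_day = 1.0
payout_total_k = days * payout_k_per_day

print(f"total cost:   ${total_k}K")
print(f"total payout: {payout_total_k}K HBD")

# Share of each category in the total.
for name, amount in costs_k.items():
    print(f"{name}: {amount / total_k:.1%} of total")
```

Labor is by far the largest share, which matches the statement that most of the cost is one-time development work.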

Goals of Image Server cluster design

Our immediate goal was to get a replacement for the Steemit image server as soon as possible, as we anticipated that Steemit would likely block access from Hive-related services (which they ultimately did, although they took longer than expected to do it).

Our other near-term goals were to reduce the long term cost of operating the image server cluster and create infrastructure that was resistant to single-point failures.

Image Server cluster components

In its simplest form, we could have used one computer as our image server, but such a design doesn’t scale as the number of images that need to be stored increases and the number of times those images have to be fetched grows with more users. The final solution we came up with is quite a bit more complicated, but it allows us to easily scale as needed.

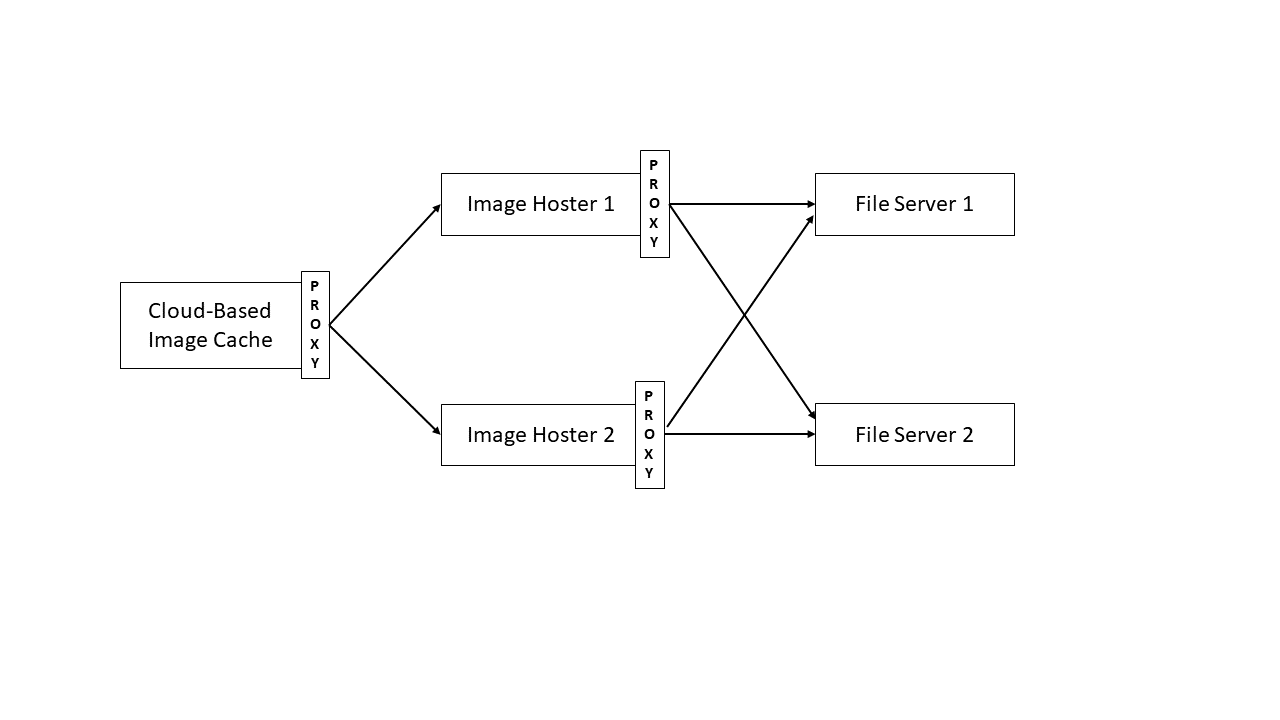

The picture below shows the various servers that compose the Image Server Cluster:

Haproxy (High Availability Proxy) is used to distribute load and detect server problems

We use haproxy at various points in the image server cluster to distribute requests to downstream servers. Haproxy automatically detects when one of the downstream servers malfunctions and distributes load to the remaining servers. It also allows us to easily offline a server or add in an experimental server for testing purposes.
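The behavior described above (spread requests across servers, skip any server that is marked as failed) can be illustrated with a toy round-robin balancer. This is only a conceptual sketch, not haproxy's implementation or configuration: the class, its methods, and the server names are all hypothetical, and a real proxy would detect failures through its own health checks rather than a manual `mark_down` call.

```python
from itertools import cycle


class Balancer:
    """Toy round-robin balancer that skips servers marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        # Stand-in for a failed health check.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Stand-in for a server passing health checks again.
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Look at each server at most once per call.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


# Hypothetical server names, for illustration only.
balancer = Balancer(["imagehoster-1", "imagehoster-2"])
first = [balancer.pick() for _ in range(4)]

balancer.mark_down("imagehoster-1")
after_failure = [balancer.pick() for _ in range(3)]

print(first)
print(after_failure)
```

With both servers healthy, requests alternate between them; once one is marked down, every request goes to the remaining server.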

Frontend image cache server

Our frontend system is a cloud-based server using Varnish that caches the most recently accessed images in memory. If an image isn’t in the cache or if a user is posting a new image, then this server passes the request on to one of the imagehosters.
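The cache-then-fall-through behavior can be sketched as a small least-recently-used cache in front of a backend fetch function. This is a simplified illustration and not how Varnish is implemented or configured: the class name, the capacity, and the `fake_imagehoster` function are hypothetical.

```python
from collections import OrderedDict


class ImageCache:
    """Tiny in-memory cache that keeps the most recently accessed images."""

    def __init__(self, capacity, fetch_from_backend):
        self.capacity = capacity
        self.fetch_from_backend = fetch_from_backend
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, url):
        if url in self._entries:
            # Cache hit: mark the entry as most recently used.
            self._entries.move_to_end(url)
            self.hits += 1
            return self._entries[url]
        # Cache miss: pass the request on to the backend.
        self.misses += 1
        data = self.fetch_from_backend(url)
        self._entries[url] = data
        if len(self._entries) > self.capacity:
            # Evict the least recently used entry.
            self._entries.popitem(last=False)
        return data


backend_calls = []


def fake_imagehoster(url):
    # Hypothetical stand-in for a request to an imagehoster.
    backend_calls.append(url)
    return f"bytes of {url}".encode()


cache = ImageCache(capacity=2, fetch_from_backend=fake_imagehoster)
for url in ["/a.png", "/b.png", "/a.png", "/c.png", "/b.png"]:
    cache.get(url)

print(cache.hits, cache.misses, backend_calls)
```

In this run the second request for `/a.png` is served from memory, while `/b.png` has been evicted by the time it is requested again and has to be fetched from the backend a second time.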

Imagehost servers

An imagehoster handles fetching images from the web and from our fileservers, adding new images to the fileservers, and resizing images for thumbnails. Currently we have two imagehost servers, just for redundancy, as a single imagehoster could easily handle the current load.

Fileservers

A fileserver stores the image files themselves (including thumbnails). The imagehoster was written to store images in S3 (Amazon’s cloud-based file storage system). To save on costs, we didn’t want to use S3 to store the images, so we’re currently storing the images on FreeNAS file servers, using MinIO to support the S3 API. We currently have two fileservers for redundancy, and @gtg is working on adding a third fileserver as part of his infrastructure. Currently all requests are served from one fileserver, as the load on the fileserver side isn’t very high yet, and the other servers are just there to avoid issues if the main fileserver fails.

Remaining work: improve the fileserver architecture and tune frontend image cache

We’ve had some problems using MinIO’s syncing facility to keep files mirrored between our pair of fileservers, so we plan to explore other options for storing the images. Two likely options are Ceph and GlusterFS.

We also plan to do more analysis of how the frontend is caching images and work to improve its performance. This may involve replacing the current caching software, increasing the size of the memory cache, or paying for a CDN (content distribution network) service.

How to vote for this proposal (#105)

Below are several interfaces that can be used to vote for this proposal

First of all: thanks for the work & the top-notch uptime! 🙏 I think many people are not aware of how important an efficient & highly responsive image-server cluster actually is. Spoiler: it's critical.

Now, I've got two questions:

I'm not really a dev-ops guy, so for me to better understand this number: could you please give a more detailed overview of this number? How many hours by how many devs?

What would happen to these if, for whatever reasons, you drop support/development for Hive in the near future? It's prob. more cost-effective to buy & host those yourself, but it creates the problem, where it takes a certain amount of time until that threshold is reached, in comparison to just renting those resources. And even if it's reached: since it's paid via DHF, it should always be able to be used for Hive. (open-source vs closed-source)

I don't plan to provide hourly breakdowns of the work BlockTrades does or our cost per worker, because that information is too sensitive to publish publicly (BlockTrades does a lot of contract work and contract work represents a substantial portion of our income).

But I can say that the hourly rate we're requesting for this task is much higher than will be typical for our hive-related work, as a large portion of the work was done by our most experienced and expensive personnel.

In an ideal world, we could have waited till someone cheaper was available to do the work, but this work was done under a couple of constraints: 1) we needed the initial setup done extremely quickly because of the Hive HF deadline and 2) we needed someone with direct access to our primary data center.

At this point, some of the work can be passed off to lower-cost personnel (and some is), but I don't plan to drop the main architect of the existing design entirely from the task, not only because he knows the system well, but also because he's extremely talented and has a lot of good ideas for how to improve the system.

It should be noted that we didn't really "want" to do this task. I was actually hoping someone else would take it on as part of the initial hardfork work, but no one else seemed interested, and it was a critical piece that had to be done and done correctly (e.g. to avoid potential loss of images from old posts).

I plan to ultimately get us to a more decentralized solution where multiple Hive enthusiasts are hosting portions of the data in some redundant fashion. But the equipment we purchased will be used in that system and I don't have any "other" use for it here (we already have plenty of fileservers for our own internal needs). I think it's a better solution to distribute the data among Hive enthusiasts than to centralize the storage on an S3-like solution. Among solutions being considered are https://ceph.io and a privately-run IPFS network.

Thanks for the detailed answer! I think it's important that we debunk any speculation from malicious people trying to justify their reasoning about why the DHF would be abused.

It should be pretty clear that having an image server that is not highly reliable is nearly as bad as having none at all. And as you said: nobody else wanted to do the job. Developing & setting it up was probably more expensive than it could have been, but based on the time constraints & the circumstances, we can't expect anything better than what you delivered.

So, again: kudos to you and your team for making sure Hive could deliver on its primary social application and obv. this proposal should be voted for! 👍

Upvoted for visibility. (And BT's answer).

For sure we needed something that works now while we figure out something for the future. I like IPFS and Ceph as technologies. The make-up of IPFS lends itself to having greater decentralization in the image hosting - including, after some dev, low-friction ways for community members to contribute. There was a guy working on something like this for the prequel-Chain; I could try to find out who that was and see how far they got. Future stuff of course.

I am no techie, but what about using a decentralized cloud storage for this?

For example SIA?

www.sia.tech

some takeaways from what they offer:

It's our plan to look at possibilities of decentralized storage at some point, including potentially using one that involves a blockchain. But at this point, storage isn't looking to be the highest maintenance cost, so we'll be focusing on the frontend first.

Hello.

Thank you for doing this.

Is IPFS already dead?

I haven't seen any mature project that uses it at scale.

Using the publicly available IPFS network on its own is not feasible, I think, because I don't think there's a guarantee of images being served. But it might be reasonable to run a semi-private IPFS network operated by Hive enthusiasts, and we're considering that as an option. But it's just the beginning of an idea; no analysis has been done on it yet.

For me to support this proposal, I'd like to see some way to reduce the centralization risk. Putting images on IPFS and making the currently centralized infrastructure open source would be a good start.

Any updates in this regard?

The infrastructure code is already open source (it's in the main Hive repo).

Just putting it on the public IPFS system won't really do anything useful, IMO, since I don't think the data will be served up at the necessary rate for a useful experience. But anyone is welcome to upload the data there.

What may work better, though, is to set up a separate IPFS network that can be supported by Hive enthusiasts (or a similar open overlay network). One of our plans is to investigate what's the best technology for such a network.

As mentioned in the post, some of the other most promising tech is GlusterFS and Ceph. A few other possibilities to investigate were mentioned by commenters.

The key requirement is high availability of the data, which means we need to have 1) reliable servers and 2) the data is distributed redundantly in such a way that one or two of the servers going down won't make any of the data inaccessible.
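To make the redundancy requirement concrete, the sketch below places each object on several distinct servers and then checks every possible combination of failed servers. It is a toy model under stated assumptions, not how Ceph, GlusterFS, or IPFS place data: the placement rule, the server names, and the object keys are all hypothetical.

```python
import hashlib
from itertools import combinations


def replicas_for(key, servers, copies):
    """Pick `copies` distinct servers for `key`, starting at a hashed offset."""
    start = int(hashlib.sha256(key.encode()).hexdigest(), 16) % len(servers)
    return [servers[(start + i) % len(servers)] for i in range(copies)]


def survives(keys, servers, copies, failures):
    """True if every key stays readable whichever `failures` servers go down."""
    for down in combinations(servers, failures):
        for key in keys:
            if all(s in down for s in replicas_for(key, servers, copies)):
                return False
    return True


# Hypothetical fileserver names and object keys.
servers = ["fs-1", "fs-2", "fs-3", "fs-4", "fs-5"]
keys = [f"image-{n}" for n in range(200)]

two_copies_two_failures = survives(keys, servers, copies=2, failures=2)
three_copies_two_failures = survives(keys, servers, copies=3, failures=2)

print(two_copies_two_failures, three_copies_two_failures)
```

With only two copies per image, some pair of simultaneous failures always takes an image offline; with three copies on distinct servers, any two failures leave at least one copy reachable.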

Thanks @blocktrades for the info. I am close to supporting this proposal!

Are you talking about the hive/imagehoster repository? Would be great to have a list of repositories and potentially even the commit range that this proposal covers.

My primary longterm concern is to avoid centralization risk. For example, I want to avoid having the availability of all images dependent on a single entity. If that entity is going to disappear, it is essential that other actors can quickly mirror the images and hosting can be migrated with minimal (no) disruption.

What I like about IPFS is twofold:

To what extent does the current / proposed implementation achieve these goals?

I'm not tied to IPFS. Just I know less about the alternatives.

I see that realistically a single entity likely has to bear the brunt of the hosting demand for now. I just want to make sure it's designed in such a way so that the ecosystem is not forever dependent on that entity for hosting.

Yes, I was talking about hive/imagehoster repo.

We have made some changes to the codebase (not yet committed, AFAIK) to account for performance changes required by the move away from Amazon S3 storage, but most of the work so far has been server setup and experimentation (i.e. devops work) rather than coding (e.g. setup of the infrastructure described in the post, such as image caching, haproxy, imagehoster, fileservers, etc).

Our plan is definitely to move to a decentralized storage system, but until more work is done, I don't know what form it will take. Figuring that out is the big remaining task, and will require a lot more investigation and experimentation. In the long run, we also don't want to be responsible for all the load either.

Servers, infrastructure, and dev-ops work certainly doesn't grow on trees :)

Keeping the ecosystem thriving with top infrastructure isn't just about the actual costs we need to allocate for, but also the dev-ops labour for ongoing maintenance, planning, and implementation.

You're not just funding hours, labour, and server costs, but also the brain resources that come from years of experience. There is great value in that.

I'm glad to see @gtg is being included (correct compensation wasn't a real priority at our prior chain); so much hard work by a good many Steemit workers wasn't properly valued.

I believe without the strong support from the likes of @gtg, @blocktrades and others not mentioned here we wouldn't be thriving on Hive and making this great legacy in blockchain.

I support this proposal for continued stellar dev-ops work and resources needed for robust infrastructure 👍

Thanks for all you do keeping Hive alive. Currently my vote doesn't matter but I voted with 500 hive anyway.

The era of the proposal. I am sure we will discover that this era will be slow in funding necessary projects since most in the community will be technically ignorant and unable to or unwilling to make informed decisions.

The one benefit of Steemit (although @ned was terrible) is the company did not have to pitch each expense to the masses. In the future a more workable solution will probably involve electing a sort of board of directors who can then direct budgets based on the actual needs of running a decentralized blockchain.

Unfortunately there is already too much drama between proposals and the community at large. I hope this changes over time, or at least that those who understand the actual cost of software/hardware development have the necessary Hive stake to push proposals through in a timely manner.

I know I am not qualified to know what is a necessary expense and what is wasteful, but I do have developer friends in Silicon Valley, so I at least know what their time is worth, from Google programmers to first-year startups. Just the one friend has been paid, and is currently being paid, hundreds of thousands of dollars for full-time work, and certain members of this community are making a big stink about $20k to $30k of work...

Rant done. Here's to hoping for the best!

It would be nice if proposals like these had a more in-depth cost breakdown for clarity and transparency. Large categories lumped together make it hard for the community to understand what all went into those costs being asked for in reimbursement.

A more in-depth breakdown of the billable hours. Thank you for waiving your hours spent on this project.

A breakdown of major hardware costs like the $10.5k in equipment purchases. What happens to this hardware if it’s no longer in use? Maintenance costs already incurred and projected monthly costs?

A monthly breakdown of server hosting costs already paid and a projected cost moving forward for the remainder of the period the proposal covers. Is there a built-in 10-20% buffer for unexpected costs? What happens if the funds fall short of covering costs? What happens to any remaining excess? Will these shortages or overages be rolled over into the next proposal?

Understandably, there would be startup costs to get everything rolling. So I am supporting this.

The DHF will eventually hit a point where people asking for 10k, 20k, 50k, and even greater amounts with vague ranges of costs will make it hard for the community to determine which proposals offer the greatest value and where the community needs to work on getting a better deal.

Vagueness also prevents the community from leveraging its wide range of knowledge and connections to help people get better deals, where possible, for projects that are being paid out from the DHF.

This is a high-level functional design and proposal. I think there are quite a few around here who could digest the details of what you are trying to accomplish. Do you have a more detailed Statement of Work (SOW) and Bill of Materials (BOM) for this project? Does this cost constitute a rough order of magnitude estimate?

Rather than try to estimate it, given we didn't know much about the software when we started, we just decided to do it first, then report on the cost afterwards. Doing the work in advance does increase the risk of not being funded for work done, but it has the advantage that we didn't have to speculate on the amount of time it took.

But it's true that we haven't done all the work yet. Of the remaining work, the most unpredictable is the amount of time it will take to get the fileservers syncing the way we want, and whether that will require more complicated software like Ceph or GlusterFS.

Reduce the trading fees and I'll consider voting. 😉

Pretty ironic you're not questioning this one like you did the one from @justineh, eh?

Is it because she's a woman?

Is it because @blocktrades has more voting power?

Did you just need to take a retarded stance against something?

It's obviously not about the amount or value. So, what is it? Are you sexist or are you just a bitchy troll?

best wishes for you!

Everything that works toward the best of the HIVE functionality is more than welcome. About the price of this proposal and some others we have seen lately, I guess they must be judged by people with some expertise regarding the topic at hand. All for the best of HIVE. I wish you good fortune in the wars to come.

Sure I will vote for it. Thanks for your work!

What is the cost to store on s3?

Is it that much more than 63k?

Serving from S3 is a long-term cost, and there are two separate cost components: storage and data serving. The goal of this work was to cut down the long-term cost. Most of the 63K is a one-time cost associated with that effort. Also note that if we had stuck with S3, we still would have had costs associated with setting that up as well.

Sure, I will vote for it.

Just a question here, could it perhaps decrease the capacity required if we were to limit the images on posts to perhaps 5, or 7 images max?

The current storage costs aren't that bad, and limiting by post probably isn't useful (after all, people could just make more posts).

Yes, but a small problem could be repeated images instead. I guess a lot of users upload the same image multiple times instead of reusing the image's link. For example, when they write a post, then edit it by deleting an image, adding some text, and then uploading the same image again.

At the beginning I did it too. :)

Is it a problem for you?

No, as long as it's the same image, it's not a problem, because the system automatically detects duplicates.

Oh that's good... better like this, but it just checks the name of the file, doesn't it?

No, it checks the actual contents of the image, not the name of the file.
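One common way to detect duplicates by content rather than by filename is to key each stored image by a hash of its bytes. The sketch below illustrates that idea only; the `ImageStore` class is hypothetical, and the choice of SHA-256 is an assumption rather than a statement about what the imagehoster actually uses.

```python
import hashlib


class ImageStore:
    """Toy store that keys images by a hash of their contents."""

    def __init__(self):
        self._blobs = {}

    def put(self, filename, data):
        # The filename plays no part in the key; only the bytes do.
        # SHA-256 is an assumption made for this sketch.
        key = hashlib.sha256(data).hexdigest()
        is_new = key not in self._blobs
        if is_new:
            self._blobs[key] = data
        return key, is_new

    def __len__(self):
        return len(self._blobs)


store = ImageStore()
key_a, new_a = store.put("cat.png", b"\x89PNG fake image bytes")
key_b, new_b = store.put("renamed-cat.png", b"\x89PNG fake image bytes")
key_c, new_c = store.put("cat.png", b"\x89PNG different bytes")

print(new_a, new_b, new_c, len(store))
```

The same bytes uploaded under a different name map to the same key and are stored once, while different bytes uploaded under the same name get a new key.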

Wow cool!! ;) Thanks for the info

Okay, I understand and it was just a thought.

Did the vote in any case, as you guys do important work to improve Hive.

Take care!

This is a no brainer. Hive wouldn't have happened without this.

I place high resolution copies of many of the photos that I use on my personal web servers.

As you rework the image servers, I've wondered if there is any way that I could get the original image on my site set as the canonical source.

I have also wondered: What is the size that Hive prefers for images?

Not sure on the canonical issue, but maybe someone else will have a thought about it. You may want to add a gitlab issue for a discussion about it on condenser.

Currently the image server is set to take images up to 10MB in size.

Do you have a problem if I translate your post?

You are welcome to translate it.

I'm torn on this. Like Justine's proposal, I'm not a fan of dishing out funding for work already completed without the expectation of funding. But your company has done a lot for this and the last blockchain as far as I can tell. It's a "large" amount, but I'm really unsure of whether it is a reasonable amount. Perhaps someone can convince me.

Personally, I'd prefer that more work was done, then funded, when possible. With "proposed" work, there is always a higher risk that the work will never be done. But for a lot of people, getting paid after the work is done isn't an option, of course.

Thank you. Paying for work already done, as opposed to funding something that may not deliver (the final say-so could well be out of the hands of the worker, e.g. exchange listings), makes far more sense to me.

Who wouldn't opt for this as a business if they could!?

Ok

The image situation just has to improve. Given this is a good solution, I am inclined to vote yes on it unless someone has a better solution.

I have voted yes to this proposal.

Hi, is it possible to provide a detailed breakdown of our server costs? A table with all the numbers would be appreciated, not just a rough draft. What's going to be improved? The milestones, the issues, etc.

Thanks for all your work to further this blockchain! Voted for the proposal.

I think it might help alleviate the concerns of some people if there is a more detailed breakdown of the costs - what was done, roughly how much time it took (or is expected to take) and the hourly rate. This would also give you the opportunity to include the pro-bono work you have done which I think has gone unnoticed. Any contract with a company would include an invoice with a cost breakdown (including any pro-bono work), so I think we have to learn to do this when the company paying for the work is actually a decentralized community. It seems like it would alleviate a lot of concerns and create more accountability and transparency. My guess is a lot of the negative feedback people are voicing is really about them wanting a bit more clarity as to what exactly was done and being charged for - it's a bad experience to be left in the dark (even if it was unintentional) so I can understand why they are reacting this way.

👍🤣😉

Visiting here from Venezuela; I hope you are well. After many stumbles I made it here to share knowledge and know-how with you. I hope to count on your support, since this is a source of income that I badly need. Greetings, friends, and I hope to count on your visits.

Let me copy relevant paragraphs from my article about current proposals

I think that in your position you should be the one setting a good example for the rest of the proposals. I think that your approach “the labour is approximately worth 50k HBD according to my calculations” is objectively the wrong way to do it. How can any sensible investor (that would want to judge this proposal without knowing who you are and what you have done for most of the DPOS communities in the past) make an educated decision that would lead to an approval of such a proposal? Each investor should be interested AT LEAST in questions such as:

Those are all super important questions. We need DETAILED proposals, not vague proposals. After all, the “Image server cluster” has been worked on for one month and will take roughly one more to finish, correct? 50,000 HBD for 2 months seems like massive overkill in my book, given that I don’t know how many people and how many man-days we are paying for, and whether there aren’t cheaper and equally good alternatives (that could be hidden in crypto projects too).

It is a large amount of money to be paid out, but I don't see a good alternative for image hosting.

I have translated the proposal: https://peakd.com/hive-121566/@satren/translation-proposal-entwicklung-und-wartung-des-image-server-cluster

And another question, is the #hdf set on purpose? I thought the DHF is the new name for @steem.dao

Thanks for running the ball down the field for us!

Why am I blocked from trading?

Something about a whitelist?

Shouldn't my account prompt me?

Where are you blocked from trading? Are you talking about on our site? And if so, are you trying to buy hive or trade.hive?

I found it, I was misreading the assets.

Hi, I would like to ask a question: could you please tell me how the curation program works on the Hive platform? Yours or some other one, because I don't understand some things about it, given some observations. Thank you )

I believe someone is updating the whitepaper that explains how curation works. Probably best to wait for that, or search for some posts on the subject, which can explain in better detail than I can easily do.

I'll pitch in with a suggestion to look at Tardigrade.io.

It's Decentralized Cloud Storage, which is based on the file distribution network of Storj.io.

It's less expensive than AWS.

So, we are paying for a centrally controlled server purchase that's yours?

It could be possible to give the user the option of using 3rd party services for image hosting, and also the option to host the images on Hive enthusiasts' IPFS servers (which could also earn some sort of reward for the hosting service).

Sure, a site could always do URL rewrites I think, and pull images from a different source with a similar URL layout.

I love how Hive has so many eras.

We are now in the proposal era hahaha. Man, I love having so much homework because of the skin in the game.

We need to target people in countries who are looking to exit their fiat into almost anything, they won’t mind powering up hive... granted they have any money to begin with.

Free and open cooperation projects always carry the risk of financing.

That is, they go forward hand in hand with volunteers and end up dying. I'm not saying it's fair. Absolutely not. But it is reality.

I have been working and collaborating for years with free and open source software projects: Linux, Gnu, various distributions.

The only way to carry them out is by vocation, with selfless effort.

For example, only Ubuntu, from the hand of a billionaire, has managed to detach itself and assume a commercial profile on the one hand and free on the other.

I wish you the best of luck. But it is very difficult.

You own 5 million HIVE and you are a witness. You own an exchange, which a big part of the HIVE community uses.

Why don't you just provide value to the chain, pump your own bags (and your witness earnings), and promote your exchange? Why go for the 50k in labor costs? I get the computer and server costs, but not the labor.

When you designed the SPS, you asked for 100k. Image hosting is 50k?? Do you realize how important the SPS was to Steem compared to image hosting?

I appreciate your work for HIVE but I'm never voting this. What you should do is provide value and be an example. Don't bill every line of code you write.

I didn't even add any of my own time spent to the billing for this, just the work done by our staff. But despite not billing for my own labor, labor is going to be the main cost for most of the work we do. So essentially you're asking us to do the work for free. Do you expect freedom to also work for free? Maybe you should contact him and get him to do the work, since he has a larger stake than blocktrades does.

Witness earnings wouldn't begin to approach the costs, nor are they meant to, that's in fact why we have a proposal system now. There was a time long ago when it was suggested that witness pay could pay for development work, but that was before witness pay was dramatically cut (and it wasn't really working well even then).

As to the SPS vs imageserver work, my billing method is by cost, not by expected benefit. It's hard to measure benefit, and it's not always fair to bill that way anyways, IMO, when it's work that someone else can potentially do.

Labour cost is almost always the most expensive cost!

Witness earnings are low now yes. Which is why I would have voted to cover the costs of servers. A small amount for labor as well, maybe. But this is too big.

What will the rest of the year cost, a million? It will be worse than the Steemit, Inc dumping in the end.

Also, I don't know why but I get the feeling that the labor costs are just a nice profit, not a necessity. Maybe because the proposal was posted after the work was done? I don't know.

It would have been better to ask for the money before starting, that way, you would know if people would want to vote for a 63k proposal for improved image hosting.

I also know that you did more work than what was written in this post (I listened to the core dev meetings). I guess the rest can be free? Or another proposal will be posted?

And finally, of course I don't expect you to work for free. But the labor you and your devs put in the blockchain is an investment. Higher price means more witness earnings, a larger stake and more revenue from the exchange. If you don't believe that the price will rise in the future then let's give up now because all of this is unsustainable. The SPS will run out in the end.

Anyway, we can argue for days. In the end votes will speak for themselves, and I don't mind if you get funded. I just think it's too high of a labor cost.

Edit - about freedom, if I was him, I would 100% be working to pump my bags. Maybe he is invested in tons of coins and doesn't care. It all depends how important this stake is to him.

If it will reassure you at all, I can guarantee our costs will be far lower than Steemit's, and we'll do a lot more.

You know what I said, it is on the chain!

No one I know has admitted to knowing who owns the freedom account. There's some obvious linkage to BitShares community (the blockchain code from which Steem was derived) in early voting patterns. But beyond that, no one knows.

As far as the alpha account goes, I use it to manage non-exchange related financial transactions. It's not exactly hidden, as the account has memos like "refund from blocktrades", etc.

It was originally intended to be my personal posting account (I have an account with the same name on BitShares). The origin of the name is I wanted a name that indicated I had a part in the creation of BitShares blockchain (i.e. alpha as in beginning).

Thank you for the information..

Quick question: I see the current daily budget of the DHF = 4,899.595 HBD, and currently 6 proposals are funded totaling 805 HBD/day. So, is the remainder of the daily budget burned or saved for later use?

Relatedly, is there any plan to introduce a public audit trail of the DHF? That will help with the recent outcry, I think.

It will at least help me, if I get a post detailing the DHF funds: Source, Utility and Audit from you. Many thanks!

Maybe I misread your comment a bit. Are you asking for a post on details about how the DHF works? If so, here's an old post on the subject: https://hive.blog/blocktrades/@blocktrades/proposing-a-worker-proposal-system-for-steem

I was. So you read it correctly. And I did read the post. My question is: have there been any changes to the system, since that post was written during the Steem days?

More specifically, I was asking how the general public can find a basic audit of the DHF. Is it possible to implement an audit trail, or are there any plans for one?

Also, almost as an afterthought: is the Hive equivalent of Steemit Inc.'s stake part of the DHF? What's its status and potential use?

The actual mechanism for generating new funds via coin creation was decided after that post, but ultimately it was decided to use 10% of the author/curator reward pool to fund it (IIRC, Steemit coded the final version after discussions with community witnesses and large stakeholders).

Any of the frontends could add some dashboard with more info about the fund (i.e. I don't think any blockchain-level changes are needed). I think the main issue would be to figure out what information would be useful to display.

In the airdrop process, an amount equivalent to the Steemit stake was airdropped to the DHF as Hive, keeping the supply of Hive the same as Steem had.

Currently it is not spendable, as the DHF can only fund proposals via Hive Dollars, but there is an open issue being worked on by @howo to add a process that periodically converts some of the Hive in the DHF account to Hive Dollars. I'm not sure yet if that new code will be included in the next HF or not; it mostly depends on when it's fully tested, I suppose.

There's also an issue of deciding how fast that conversion should take place. I've seen suggestions of between 3-10 years.

Thanks! This is helpful. I need to bookmark this reply for future reference.

For the record, although I did a fair bit of the initial implementation @netuoso has taken over and finished development for that feature. We just need to do some more tests.

Any funds from the daily budget that aren't consumed by proposals ranked higher than gtg's refund proposal are automatically returned to the fund. So the funds available are slowly growing as long as some is being "refunded" by gtg's proposal (because in this case more new funds are being created than are being spent). Currently the available funds are growing.
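The rule described above can be sketched as a walk down the ranked proposal list that stops at the refund proposal. This is a simplified illustration, not the blockchain's actual code: the function, the proposal names, and the way the 805 HBD/day is split between proposals are hypothetical, while the 4,899.595 HBD daily budget and the 805 HBD/day total are the figures quoted in the question.

```python
from decimal import Decimal


def allocate(daily_budget, ranked_proposals, refund_name):
    """Pay proposals ranked above the refund proposal; return what is left."""
    remaining = daily_budget
    paid = {}
    for name, daily_ask in ranked_proposals:
        if name == refund_name:
            # Everything ranked below the refund proposal goes unfunded.
            break
        payout = min(daily_ask, remaining)
        paid[name] = payout
        remaining -= payout
    return paid, remaining


daily_budget = Decimal("4899.595")

# Hypothetical ranking by votes, highest first.
ranked = [
    ("proposal-a", Decimal("500")),
    ("proposal-b", Decimal("305")),
    ("refund-proposal", Decimal("0")),
    ("proposal-c", Decimal("1000")),
]

paid, returned = allocate(daily_budget, ranked, "refund-proposal")
print(sum(paid.values()), returned)
```

In this example 805 HBD is paid out, 4,094.595 HBD goes back to the fund, and the proposal ranked below the refund proposal receives nothing.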

At some level, the DHF is auditable now (all the data is in the blockchain and accessible via block explorers), but I guess you're suggesting that some of the frontends present the information in a clearer fashion.

Yesterday I created an issue to improve the view shown on condenser, but the immediate plan is just to make it easier to see the proposals and where they stand.

Maybe take a look at the existing proposal displays and add a new issue in condenser with the specifics of what data you'd like to see.

Exactly what I was asking. Thank you for confirming. So it is now clear to me that the daily surplus gets added to the DHF and its size increases.

I was thinking about a visual display, a tool perhaps, that plots the inputs and outputs of the DHF. I know the data exists, but a simple frontend would be useful for the average Joe (like me) to see and understand it.

Have you considered Backblaze B2?

Performance is as good as Amazon, and pricing is considerably less. They also just announced full S3 API support so it can be a plugin replacement for Amazon S3 now.

If you do stick with a NAS solution, have you given any thought to ZFS and ZFS Replication?

At the moment we're using the FreeNAS OS, which uses ZFS. We haven't looked at Backblaze B2, but it might be an interesting alternative. I'm also interested in potential "decentralized" alternatives that just let the Hive community do the hosting in some distributed fashion, with some guarantees on data redundancy.

I like the idea of decentralized community hosting, Siacoin style.

Perhaps an application similar to the Sia app could be built, but for Hive instead. You would log into your Hive account on the app and allocate some amount of disk space to be used for distributed file storage.

The blockchain would divide up a daily reward amongst everyone contributing their free disk space.

I imagine this would be quite an undertaking to design and develop, though. But I bet it would make Hive stand out even more.

If instructions or setup details are shared, I think it could be great for every app to have their own setup.

Backblaze seems interesting; I am trying it out on our instance.