Hi Steem people!

Hope you are all doing fine! I wanted to share with you some thoughts I have about the connections between artificial intelligence and the art of draftsmanship. I intentionally use the rather archaic term "draftsmanship" over "drawing" to refer specifically to drawing from observation, as opposed to drawing from imagination.

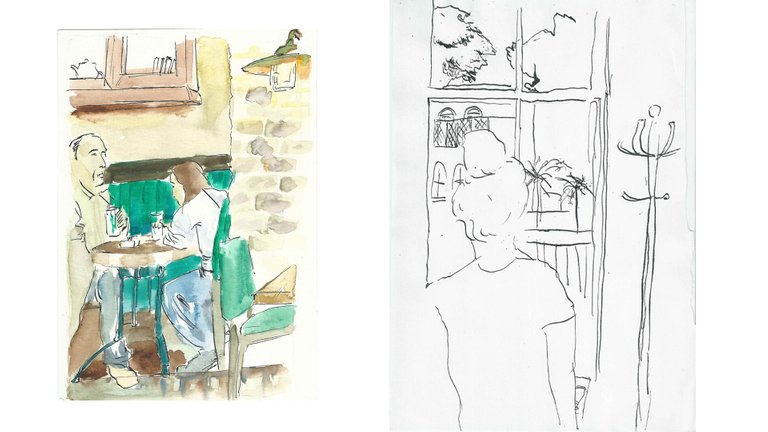

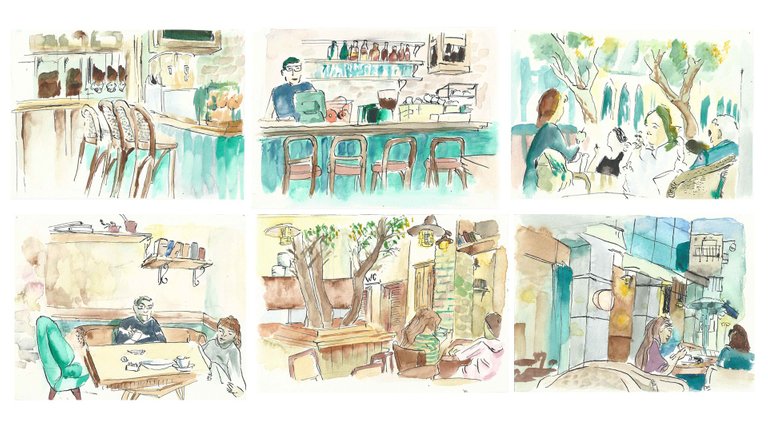

These two pictures are from a series of drawings I did in my second year at design academy, 6 years ago. In both cases I had to draw really fast, trying (as best as I could manage) to take only 5 minutes for each one. Since most of the people I drew held their position for just a couple of seconds, I had to greatly generalize what I saw and complete some of it from memory. Though the frames are not entirely in order, you can see that the lighting changed while I was sitting there: I spent a couple of hours at the location while the sun set. Drawing "en plein air" requires making a lot of intuitive decisions. Through them you can render the illusion of depth, movement and the passage of time in very few strokes.

While drawing can describe any act of putting marks on a canvas, or in a VR space, a draftsman only captures what he sees. But here it is important to distinguish the way a draftsman "sees" his subject matter from normal vision.

A camera, for example, captures the light reflecting off a scene in a veracious, neutral manner. The artist, however, transcribes the way his brain understands the image reflected towards his eyes. By doing so, the draftsman reveals the way in which his brain discriminates shapes and contours in his field of vision. While a camera's image is affected only by instrumental mechanics such as exposure time and the sensitivity of the film (or digital sensor), the artist sees the world through a set of preconceived, abstract notions: depth, shape, contrast, rhythm, contour and spots. Contours (lines) and color spots (black-and-white tones included) are the "tools" an artist uses to convey a sense of 3D reality on a 2D surface.

Unlike a simple camera, the human brain has evolved an unusually large visual cortex that is highly specialized in identifying these features. This is very similar to the approach taken in a branch of artificial intelligence research called "machine vision". I will try to connect the two shortly. For now, let me state that our eyes have a bias:

The eye scans light in very rapid movements called saccades, which can reach up to 900 degrees per second, and our iris muscles constantly contract and relax to let in varying amounts of light. Our mind interprets those features well before our conscious brain has had the chance to categorize the things we see. None of those features are REAL, but they exist as a medium through which we sense reality as it relates to us. The way I choose to put it, we actually see with our mind's eye.

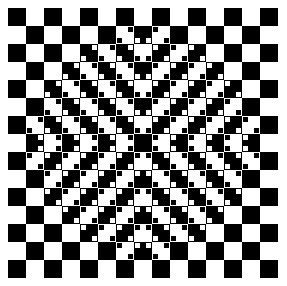

Our brains constantly interpret and adjust the image we see at lightning speed. Though our point of focus is very narrow (only about 1–2 degrees of vision) and our eyes are very light-sensitive, the persistence of vision makes us perceive a complete image of our surroundings. Illusions such as 'simultaneous contrast' happen naturally in our brain as it compensates for the lack of order in the incoming light information. Our mind completes missing or partial information automatically and to a great extent.

(more about simultaneous contrast here)

The lines are at a perfect right angle. I had to verify it myself!

No, you don't have a 'strong mind', lol. You simply have a working one, which is amazing in its own right!

The beauty of drawing from observation is that an artist can "sync" his brain with that of the viewer: he can relate a sense of the passage of time, which is the time it took the artist to see his subject. He can convey the way he "saw" what he was drawing, and so the image "reads" with the same emotion, state of mind and sense of temporality that the artist felt when he drew it. This was explained to me very well once by my drawing professor at art academy. His name is Eli Shamir, and here's a link to his business Facebook page:

https://www.facebook.com/Elie-Shamir-%D7%90%D7%9C%D7%99-%D7%A9%D7%9E%D7%99%D7%A8-1167499229964451/

A different kind of drawing: portraits done from photographs.

Here are the parallels I see between drawing and AI:

As in drawing, AI software is often used to extract features from raw data and use them to identify, categorize or manipulate data. Just as humans see patterns and features in everything, the goal in designing AI is to be able to perform tasks based on acquired knowledge. In us humans, some features are 'hard-coded' (by evolution) and some are learned while growing up. The human mind is a fantastic pattern learner: we learn in every moment of our waking existence, and even when we dream. Most brain activity goes on 'under the hood', meaning unconsciously.

Lately I've become increasingly familiar with a branch of AI called deep learning, and I'm sure many others have too; it is the trending topic in computer technology right now. It mainly deals with computing models, which are simply a way of designing code: an architecture, or structure, of code.

My favorite sources for learning about this topic are two YouTube channels:

#1 Siraj Raval's channel:

https://www.youtube.com/channel/UCWN3xxRkmTPmbKwht9FuE5A

#2 Two Minute Papers with Károly Zsolnai-Fehér

https://www.youtube.com/user/keeroyz

Both are amazing!

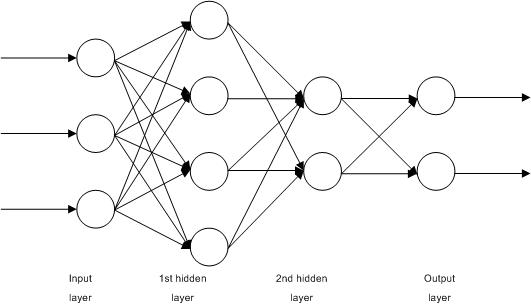

The deep learning revolution was heralded less by a fundamentally new kind of computer than by the increasing availability of data and storage space, faster internet speeds worldwide, and cheap parallel computing power from graphics cards (GPUs). This kind of AI tends to use "neural networks", which are, again, a computing model inspired by neuroscience.

A visualization of a neural net. Source: Wikimedia Commons

In a neural network, the functions that store and process data are arranged in nodes, similar to neurons and synapses in the human brain: each node computes a weighted sum of its inputs and passes it through a simple function, and the weights (loosely analogous to synapse strengths) are where what the network has learned is stored. Neural networks have been used extensively in recent years by the tech giants (Google, Amazon, etc.) and process information for billions of people. They are special because they can "learn" features on their own. The brain analogy works well in simplifying this process: the data 'flows' through the nodes the way electrical signals flow through our brain cells. The paths carved in our brains by the flow of information (often reactivated routes) form channels that persist over time. Those channels become features, and they give us a result: whether a thing is A, B, C, etc.
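To make the node analogy concrete, here is a minimal sketch in plain Python of data "flowing" through a tiny network: 2 inputs, a hidden layer of 3 nodes, and 3 output nodes for the classes A, B and C. The weights below are hypothetical, hand-picked numbers for illustration only; in a real network they would be learned from data.

```python
import math

def layer(inputs, weights, biases):
    """One layer of nodes: each node takes a weighted sum of all inputs plus its bias."""
    return [sum(w * x for w, x in zip(node_weights, inputs)) + b
            for node_weights, b in zip(weights, biases)]

def relu(values):
    """A simple activation: a node 'fires' only when its sum is positive."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 3 classes (A, B, C)
W_hidden = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
b_hidden = [0.0, 0.1, 0.2]
W_out = [[1.0, -0.5, 0.3], [-0.4, 0.9, 0.2], [0.2, 0.1, -0.7]]
b_out = [0.0, 0.0, 0.0]

def classify(x):
    """Forward pass: input -> hidden features -> probability for each class."""
    hidden = relu(layer(x, W_hidden, b_hidden))
    probs = softmax(layer(hidden, W_out, b_out))
    label = "ABC"[probs.index(max(probs))]
    return label, probs

label, probs = classify([1.0, 2.0])  # with these weights, label == "B"
```

Every node is the same tiny computation; what makes one network different from another is only the numbers in its weights.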

There are different methods to make an AI 'learn'. One of them is supervised learning: giving the AI a data set made of inputs and outputs, such as an object and its label. With a large training data set, you feed the AI labeled examples and monitor its predicted label for each item. You iterate this process until the error rate is minimized and the AI predicts the right labels for the right items. An algorithm called 'backpropagation' computes how much each weight in the network contributed to the error, so that every weight can be nudged in the direction that reduces it. After training, you feed the AI "unseen" data and check that it is able to generalize and use its acquired features to label the new information correctly.
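Here is a minimal sketch of that loop for the simplest possible network, a single node (logistic regression), where backpropagation reduces to applying the chain rule once. The training data is hypothetical: a point is labeled 1 when its two coordinates add up to more than 1. The learning rate and number of iterations are arbitrary choices for this toy example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical labeled training set: (input, label); label is 1 when x1 + x2 > 1
train = [((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.9, 0.0), 0), ((0.1, 0.7), 0),
         ((1.0, 1.0), 1), ((0.8, 0.9), 1), ((1.5, 0.2), 1), ((0.3, 1.4), 1)]

def train_node(data, epochs=5000, lr=1.0):
    """Gradient descent on cross-entropy loss for one sigmoid node."""
    w1 = w2 = b = 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)  # forward pass: predicted probability of label 1
            err = p - y                         # dLoss/dz for sigmoid + cross-entropy
            g1 += err * x1                      # chain rule: dLoss/dw1 = dLoss/dz * x1
            g2 += err * x2
            gb += err
        w1 -= lr * g1 / n                       # step each weight against the average gradient
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b

def predict(params, x):
    w1, w2, b = params
    return 1 if sigmoid(w1 * x[0] + w2 * x[1] + b) > 0.5 else 0

params = train_node(train)
errors = sum(predict(params, x) != y for x, y in train)  # mistakes on the training set
```

After training, `errors` is 0, and the node also labels points it never saw, such as `(0.2, 0.2)` and `(1.2, 1.1)`, correctly. A deep network repeats the same chain-rule bookkeeping backwards through many layers.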

But there are ways to make an AI not just categorize information but also GENERATE it. There have been some mind-boggling examples of recent research papers on the topic, such as this:

I see drawing as very similar to a generative AI model called "style transfer". Style transfer takes raw input data, such as an image, and regenerates an output image that mimics a given style.

For that to happen, both a content image and a style image are fed into the model, and the AI tries to form an image that looks like the first one, in the style of the second. Features are extracted from both images (in the original method, by passing each of them through the same pre-trained convolutional network), and another mathematical function measures which features tend to recur together across the style image. So one part of the model studies the content and the other studies the style. Put the two together and what have you got? A program that can understand "cross-domain relations". Needless to say, our minds perform this function superbly, which is why it's such a sought-after trait to replicate in AI.
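In the original neural style transfer paper (Gatys, Ecker and Bethge, 2015), the "recurrence of features" is captured by a Gram matrix: for every pair of feature channels, how strongly they fire together, summed over all positions in the image. Below is a minimal plain-Python sketch of the two losses. The feature maps are hypothetical stand-ins for what a real convolutional network would produce, and the step that actually optimizes the generated image's pixels is not shown.

```python
def gram_matrix(features):
    """features: one flattened activation map per channel, all the same length.
    Entry (i, j) is how strongly channels i and j fire together, averaged over positions."""
    n_positions = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n_positions for fj in features]
            for fi in features]

def style_loss(gen_features, style_features):
    """Mean squared difference between the two Gram matrices (where features are ignored)."""
    g = gram_matrix(gen_features)
    s = gram_matrix(style_features)
    k = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(k) for j in range(k)) / (k * k)

def content_loss(gen_features, content_features):
    """Mean squared difference of the raw activations, compared position by position."""
    diffs = [(a - b) ** 2
             for fg, fc in zip(gen_features, content_features)
             for a, b in zip(fg, fc)]
    return sum(diffs) / len(diffs)

# Hypothetical 2-channel feature maps over 4 positions
features = [[1.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 3.0]]
shuffled = [list(reversed(channel)) for channel in features]  # same features, new places
```

Because the Gram matrix sums over positions, `style_loss(shuffled, features)` is exactly 0 while `content_loss(shuffled, features)` is not: the style term cares which features co-occur, not where they appear, which is why brush-stroke texture can transfer while the layout comes from the content image.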

Another method, which has produced even more striking results, is called a Generative Adversarial Network (GAN). This model employs two rival networks that compete with each other: one is called the generator and the other the discriminator. The first generates an image while the other judges whether the image looks authentic. A feedback loop forms between the two networks as they compete and learn from each other to produce better results.
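The competition can be written down as two loss functions, following the formulation of Goodfellow et al. (2014). Here `D(x)` is the probability the discriminator assigns to "this image is real", and the generator loss is the "non-saturating" variant that paper recommends using in practice. This is a minimal sketch of the losses only; the networks themselves and the alternating training loop that updates them are not shown.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one prediction; clamping guards against log(0)."""
    eps = 1e-12
    prob = min(max(prob, eps), 1 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake

def generator_loss(d_fake):
    """The generator wants the discriminator to call its fakes real: D(fake) -> 1."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)
```

When the discriminator confidently spots the fakes (say `d_fake = [0.01, 0.02]`), its own loss is small and the generator's loss is large, which pushes the generator to improve; when the fakes fool it, the pressure flips back onto the discriminator. That back-and-forth is the feedback loop described above.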

This process reminds me of how an artist 'updates' his knowledge of reality by observing new phenomena, and through criticism that originates in the minds of others. Thus, when the artist looks at a new subject, he can form a better, 'more real' representation of it.

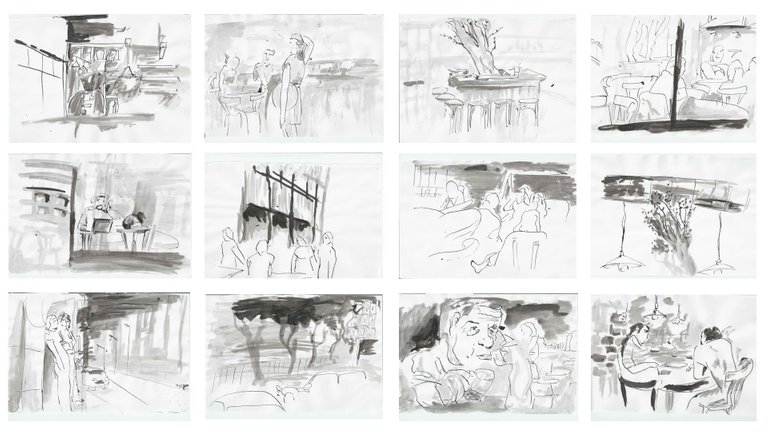

Yet another set of drawings, this time with 15 minutes for each one.


I encourage readers who made it this far 😄😄 to comment and add to my knowledge, or to contest what I've written. Admittedly, I'm not a computer science scholar by any stretch of the imagination, but machine learning is a personal passion of mine, along with drawing and illustration. So the language I've used to describe my knowledge may be quite inaccurate, and I invite those who are more versed in it to correct me.

Thank you SO MUCH for reading and for devoting your time, attention and support!

Have a fantastic weekend!

@originalworks

The @OriginalWorks bot has determined this post by @uv10 to be original material and upvoted it!
